Automated Tests

FIRST - Principles of Automated Testing

- Fast: Tests should run quickly to provide fast feedback.

- Independent: Tests should be isolated and not depend on each other to run.

- Repeatable: Tests should produce the same result every time they are run.

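- Self-Validating: Tests should have a boolean outcome (pass or fail) determined by clear assertions, with no manual inspection of the results.

- Timely: Tests should be written early in development, ideally just before the production code that makes them pass, as in TDD.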
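A minimal sketch of the Independent, Repeatable and Self-Validating principles, written in plain Node.js so it runs without Jest. Each test builds its own fresh fixture instead of sharing mutable state, and the current time is passed in as a parameter rather than read from the system clock. The `Cart` class, the `makeCart` factory and the `isExpired` function are hypothetical names used only for illustration.

```javascript
// Hypothetical cart used only to illustrate the FIRST principles.
class Cart {
  constructor(createdAt) {
    this.items = [];
    this.createdAt = createdAt;
  }

  add(item) {
    this.items.push(item);
  }
}

// Independent: every test calls this factory, so no state leaks between tests.
function makeCart(createdAt = new Date('2024-01-01T00:00:00Z')) {
  return new Cart(createdAt);
}

// Repeatable: "now" is a parameter, so the result never depends on when the test runs.
function isExpired(cart, now, maxAgeMs = 24 * 60 * 60 * 1000) {
  return now.getTime() - cart.createdAt.getTime() > maxAgeMs;
}

// Self-validating: each test returns true (pass) or false (fail) from explicit checks.
const tests = {
  'adds an item to an empty cart': () => {
    const cart = makeCart();
    cart.add({ name: 'Book', price: 50 });
    return cart.items.length === 1;
  },
  'starts empty regardless of the previous test': () => makeCart().items.length === 0,
  'expires after 24 hours': () => isExpired(makeCart(), new Date('2024-01-02T00:00:01Z')),
  'is not expired after 1 hour': () => !isExpired(makeCart(), new Date('2024-01-01T01:00:00Z')),
};

// The tests can run in any order because none of them depends on another.
const results = Object.entries(tests).map(([name, fn]) => ({ name, passed: fn() }));
results.forEach((r) => console.log(`${r.passed ? 'PASS' : 'FAIL'} ${r.name}`));
```

Injecting the clock is the design choice that makes the expiry tests repeatable: the same inputs give the same verdict on any day.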

Test Patterns - Test Double

A test double is a pattern that replaces a DOC (depended-on component) in certain types of tests, usually for performance, security or determinism reasons (for example, when the real component is slow, has side effects, or returns values that change over time, such as an exchange rate).

Example scenario: Must place an order with 3 items priced in dollars

- Given a new order with 3 associated items: a $50.00 Book, a $20.00 CD and a $30.00 DVD

- When the order is placed

- Then an order confirmation should be returned containing the code, the total order amount of R$550.00 (given a dollar exchange rate of R$5.50) and the status "awaiting payment"
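The scenario above can be sketched as a plain Node.js test in which a stub stands in for the real exchange-rate service, so the total is the same no matter what the live dollar quote is. `placeOrder`, `exchangeRateStub`, its `getDollarRate` method, the `ORD-` code format and the `AWAITING_PAYMENT` status value are all hypothetical.

```javascript
let nextOrderNumber = 1;

// Hypothetical order service: the exchange-rate provider (the DOC) is injected.
function placeOrder(items, exchangeRateProvider) {
  const totalInDollars = items.reduce((sum, item) => sum + item.price, 0);
  const rate = exchangeRateProvider.getDollarRate(); // R$ per US$
  return {
    code: `ORD-${nextOrderNumber++}`, // hypothetical confirmation-code format
    total: totalInDollars * rate,
    status: 'AWAITING_PAYMENT',
  };
}

// Test double: a stub that always answers R$5.50 per dollar.
const exchangeRateStub = { getDollarRate: () => 5.5 };

// Given a new order with 3 items priced in dollars
const items = [
  { name: 'Book', price: 50.0 },
  { name: 'CD', price: 20.0 },
  { name: 'DVD', price: 30.0 },
];

// When the order is placed
const confirmation = placeOrder(items, exchangeRateStub);

// Then the confirmation has a code, the total in reais and the awaiting-payment status
// (50 + 20 + 30 = US$100.00, and 100 x 5.50 = R$550.00)
console.log(confirmation.total.toFixed(2)); // prints "550.00"
```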

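Dummy - Objects passed around to fill parameter lists but never actually used.

```javascript
function createOrder(user, items, dummy) {
  // dummy is never used; it only satisfies the function signature
  // ... code that creates the order
  return { user, items };
}

test('create order', () => {
  const user = { name: 'John' };
  const items = [{ name: 'Book', price: 50 }];
  const dummy = {};

  const order = createOrder(user, items, dummy);

  expect(order.items).toHaveLength(1);
});
```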

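Stub - Provides pre-defined answers to the calls made during the test, isolating the code under test from the real behavior.

```javascript
// Stub: always returns the same pre-defined dollar quote
const currencyService = {
  getDollarQuote: () => 5.5
};

function createOrder(items, currencyService) {
  // uses the stub for the dollar quote
  const totalInDollars = items.reduce((sum, item) => sum + item.price, 0);
  return { total: totalInDollars * currencyService.getDollarQuote() };
}

test('create order', () => {
  const items = [{ price: 50 }, { price: 20 }, { price: 30 }];

  const order = createOrder(items, currencyService);

  expect(order.total).toBe(550);
});
```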

Spy - Captures and records information about method calls.

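```javascript
const emailService = {
  sendOrderConfirmation: jest.fn() // spy: records every call it receives
};

function createOrder(items, emailService) {
  // ... code that creates the order
  // the code under test calls the dependency; the spy records the call
  emailService.sendOrderConfirmation();
}

test('create order', () => {
  const items = [{ name: 'Book', price: 50 }];

  createOrder(items, emailService);

  expect(emailService.sendOrderConfirmation).toHaveBeenCalled();
});
```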
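To show what a spy does under the hood, here is a hand-rolled version that runs with plain Node.js. It wraps a function, forwards each call and records the arguments so the test can inspect them afterwards. `createSpy` is a hypothetical helper, not part of Jest; in Jest, `jest.fn()` exposes the same kind of record through its `mock.calls` array.

```javascript
// Hypothetical helper: wraps an optional implementation and records every call.
function createSpy(impl = () => undefined) {
  const spy = (...args) => {
    spy.calls.push(args); // record the arguments of this call
    return impl(...args); // forward to the real (or default) behavior
  };
  spy.calls = [];
  return spy;
}

function createOrder(items, emailService) {
  const order = { id: 42, items };
  emailService.sendOrderConfirmation(order.id);
  return order;
}

const emailService = { sendOrderConfirmation: createSpy() };
createOrder([{ name: 'Book', price: 50 }], emailService);

// The spy answers "was it called, how often, and with what?"
console.log(emailService.sendOrderConfirmation.calls.length); // 1
console.log(emailService.sendOrderConfirmation.calls[0]); // [ 42 ]
```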

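Mock - Pre-programmed with the calls it expects to receive, and verifies that those calls occurred as expected.

```javascript
const paymentService = {
  // mock: pre-programmed to resolve with 'PAYMENT_OK'
  charge: jest.fn().mockResolvedValue('PAYMENT_OK')
};

function createOrder(items, paymentService) {
  const total = items.reduce((sum, item) => sum + item.price, 0);
  return paymentService.charge(total);
}

test('create order', async () => {
  const items = [{ price: 50 }, { price: 20 }, { price: 30 }];

  const result = await createOrder(items, paymentService);

  expect(result).toBe('PAYMENT_OK');
  // verify that the expected interaction actually happened
  expect(paymentService.charge).toHaveBeenCalledWith(100);
});
```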
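The difference between a spy and a mock is where the verification lives: a spy only records calls and the test asserts afterwards, while a mock is programmed up front with the calls it expects and then verifies itself. A minimal hand-rolled sketch in plain Node.js; `createMock`, `expectCall`, `verify` and the `charge(amount)` signature are hypothetical names, not a real library's API.

```javascript
// Compares argument lists by value (good enough for the primitives in this sketch).
const sameArgs = (a, b) => JSON.stringify(a) === JSON.stringify(b);

// Hypothetical strict mock: expectations are declared before the code under test runs.
function createMock() {
  const expectations = [];
  const received = [];
  return {
    // Pre-program: when `method` is called with `args`, return `result`.
    expectCall(method, args, result) {
      expectations.push({ method, args, result });
      this[method] = (...actualArgs) => {
        received.push({ method, args: actualArgs });
        const hit = expectations.find(
          (e) => e.method === method && sameArgs(e.args, actualArgs)
        );
        if (!hit) throw new Error(`Unexpected call: ${method}(${actualArgs.join(', ')})`);
        return hit.result;
      };
    },
    // Verify: every expected call must have been received.
    verify() {
      for (const e of expectations) {
        const seen = received.some((r) => r.method === e.method && sameArgs(r.args, e.args));
        if (!seen) throw new Error(`Expected call not received: ${e.method}(${e.args.join(', ')})`);
      }
    },
  };
}

function createOrder(items, paymentService) {
  const total = items.reduce((sum, item) => sum + item.price, 0);
  return paymentService.charge(total);
}

const paymentService = createMock();
paymentService.expectCall('charge', [100], 'PAYMENT_OK');

const result = createOrder([{ price: 50 }, { price: 20 }, { price: 30 }], paymentService);
paymentService.verify(); // throws if charge(100) was never called
console.log(result); // PAYMENT_OK
```

Being strict (throwing on any unexpected call) is a choice; many mocking libraries let you configure whether unexpected calls fail the test.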

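Fake - Working implementations that take a shortcut (for example, an in-memory database), which makes them unsuitable for production.

```javascript
// Fake: a working in-memory implementation that replaces the real database
class FakeDatabase {
  constructor() {
    this.orders = [];
  }

  addOrder(order) {
    this.orders.push(order);
  }
}

function persistOrder(order, database) {
  database.addOrder(order);
}

test('persist order', () => {
  const order = { id: 1, total: 550 };
  const database = new FakeDatabase();

  persistOrder(order, database);

  expect(database.orders).toContainEqual(order);
});
```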

Test Types

Unit

Tests smaller code units in isolation.

Tools:

Integration

Tests the integration between application modules and layers, for example:

request -> routes -> controller -> repository -> controller -> response

Narrow integration tests

- Exercise only the part of the code in my service that communicates with a separate service

- Use test doubles (stubs/mocks) of those services, either in the same process or remote

- Consist of many narrowly scoped tests, usually no larger in scope than a unit test (and usually run with the same test frameworks used for unit testing)
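A narrow integration test exercises only the code that talks to the separate service and replaces the service itself with a double. A minimal sketch in plain Node.js, assuming a hypothetical `ExchangeRateClient` that receives its HTTP function by injection; the base URL, the `/rates/USD-BRL` path, the `{ rate }` response shape and `fakeFetch` are all invented for illustration. The fake responses mimic only the parts of a Fetch API `Response` the client uses (`ok`, `status`, `json()`).

```javascript
// Hypothetical client: the only code in "my service" that talks to the rates service.
class ExchangeRateClient {
  constructor(fetchFn, baseUrl) {
    this.fetchFn = fetchFn;
    this.baseUrl = baseUrl;
  }

  async getDollarRate() {
    const response = await this.fetchFn(`${this.baseUrl}/rates/USD-BRL`);
    if (!response.ok) {
      throw new Error(`Rates service responded with status ${response.status}`);
    }
    const body = await response.json();
    return Number(body.rate);
  }
}

// In-process stub of the remote service: records each URL and returns a canned reply.
function fakeFetch(status, body) {
  const requested = [];
  const fn = async (url) => {
    requested.push(url);
    return { ok: status >= 200 && status < 300, status, json: async () => body };
  };
  fn.requested = requested;
  return fn;
}

async function main() {
  // Happy path: the client builds the right URL and parses the rate.
  const okFetch = fakeFetch(200, { rate: '5.50' });
  const client = new ExchangeRateClient(okFetch, 'https://rates.example.com');
  const rate = await client.getDollarRate();
  console.log(rate, okFetch.requested[0]);

  // Failure path: a non-2xx reply is turned into an error.
  const failing = new ExchangeRateClient(fakeFetch(503, {}), 'https://rates.example.com');
  await failing.getDollarRate().catch((err) => console.log(err.message));

  return { rate, url: okFetch.requested[0] };
}

main();
```

A broad integration test would instead point the same client at a running instance of the real rates service.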

Broad integration tests

- Require live versions of all services, which demands a substantial test environment and network access

- Exercise code paths across all services, not just the code responsible for interactions

Tools:

Same as for unit testing

End-to-End (E2E)

Simulates the complete application flow from the user's perspective.

Tools:

Detox

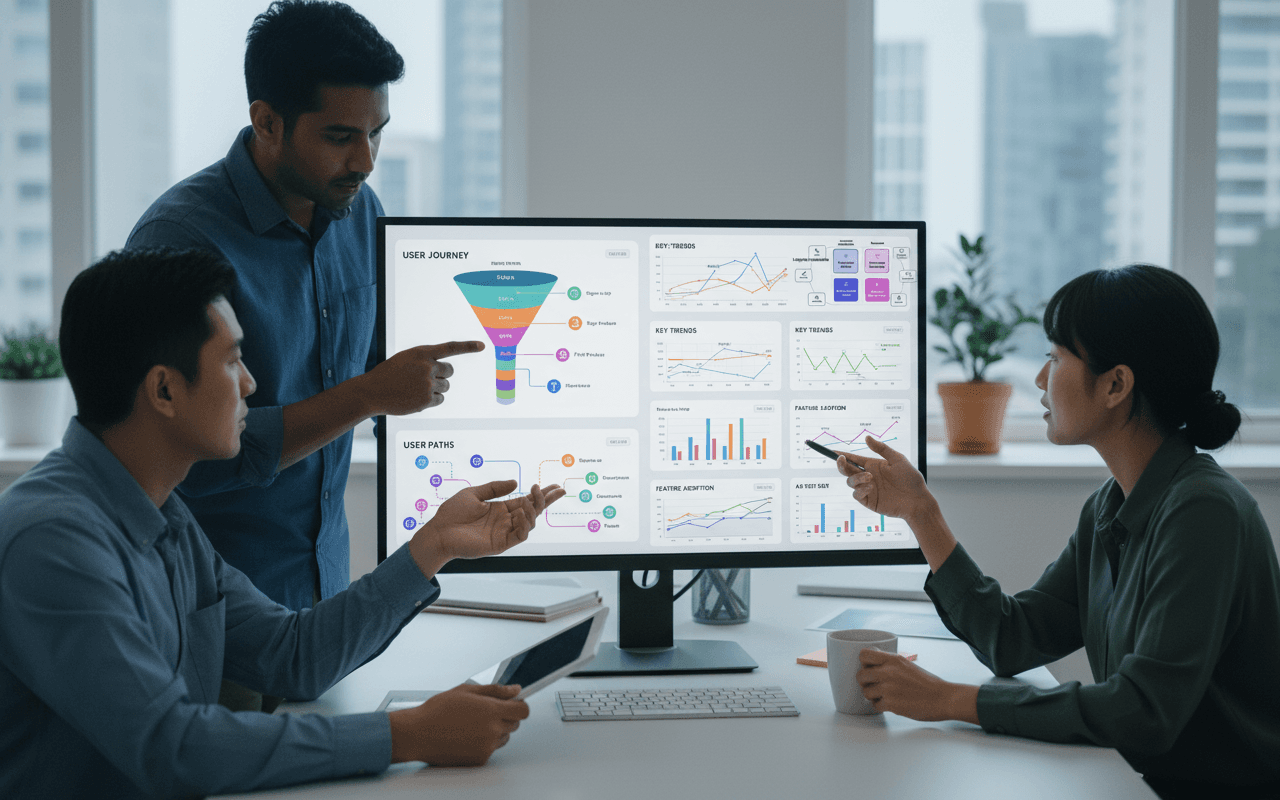

A/B Tests

A/B testing (also known as split testing) is a way to compare two versions of an application or webpage to see which performs better. The goal is to improve key metrics like conversion rates, engagement, etc.

During an A/B test, the original version (A) and a new variant (B) are shown to different segments of users. Their interactions and behaviors with each version are measured and statistical analysis is used to determine if B outperforms A.

How it works:

- Identify a key metric to optimize (e.g. signups, purchases)

- Create a variant (B) to test against the original (A)

- Show A and B to different user groups

- Collect data and compare metrics between A and B

- Determine whether to keep, discard or run another iteration of B
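Showing A and B to different groups is commonly done with deterministic bucketing, so the same user always sees the same variant, and the comparison step needs a statistical check before deciding. A minimal sketch using Node's built-in `crypto` module; the experiment name, the 50/50 split and the sample counts are hypothetical, and the significance check shown is a standard two-proportion z-test at the 5% level, one of several possible choices.

```javascript
const crypto = require('crypto');

// Deterministic bucketing: hash(experiment + userId) gives the same variant on every visit.
function assignVariant(userId, experiment = 'checkout-button') {
  const hash = crypto.createHash('sha256').update(`${experiment}:${userId}`).digest();
  return hash.readUInt32BE(0) % 2 === 0 ? 'A' : 'B'; // hypothetical 50/50 split
}

// Two-proportion z-test comparing the conversion rates of A and B.
function compareConversion(a, b) {
  const pA = a.conversions / a.users;
  const pB = b.conversions / b.users;
  const pooled = (a.conversions + b.conversions) / (a.users + b.users);
  const se = Math.sqrt(pooled * (1 - pooled) * (1 / a.users + 1 / b.users));
  const z = (pB - pA) / se;
  // |z| > 1.96 means the difference is significant at the 5% level (two-sided).
  return { pA, pB, z, significant: Math.abs(z) > 1.96 };
}

console.log(assignVariant('user-123') === assignVariant('user-123')); // true

// Hypothetical collected data: 10% vs 13% conversion with 1000 users each.
const result = compareConversion(
  { users: 1000, conversions: 100 },
  { users: 1000, conversions: 130 }
);
console.log(result);
```

Including the experiment name in the hash keeps bucket assignments independent across experiments.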

A/B testing is commonly used for testing changes like different UI designs, page layouts, call-to-action buttons, email subject lines and more.

Tools:

Testing Techniques

- White box - Based on source code, observes internal data flow

- Black box - Based on requirements, tests application from user's point of view

- Regression - Re-runs tests to detect errors after changes

- Usability - Tests experience and behavior from the user's point of view

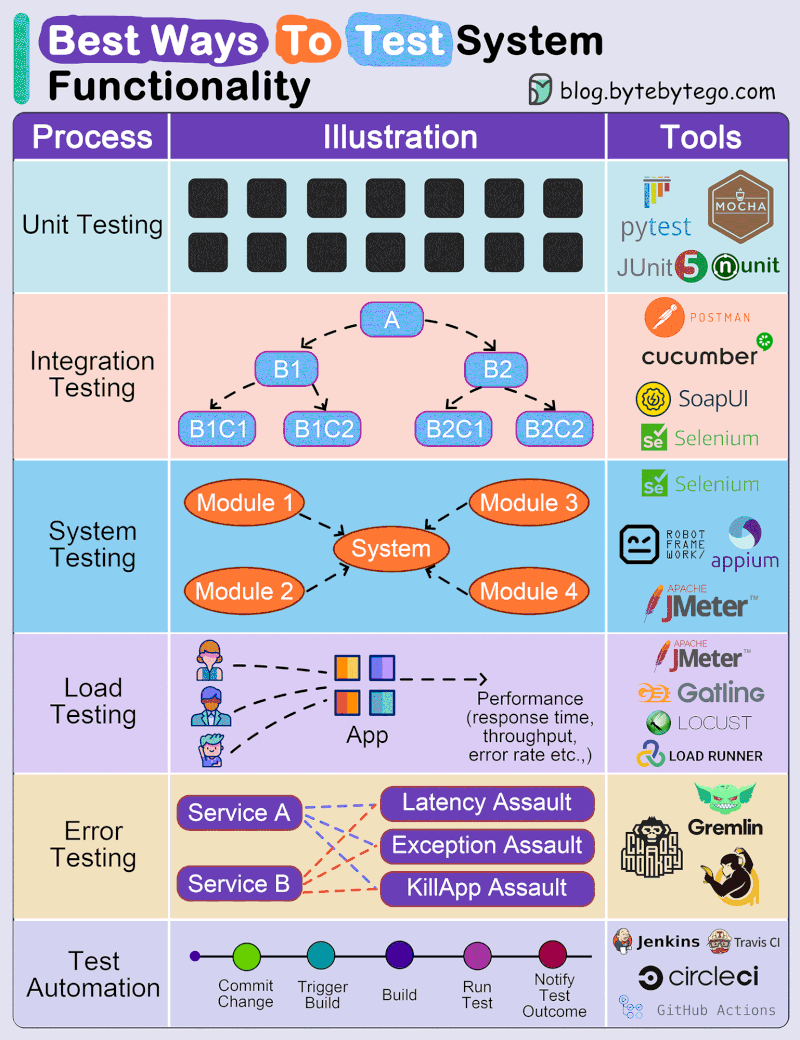

Test Systems

- Unit Testing: Ensures individual code components work correctly in isolation.

- Integration Testing: Verifies that different system parts function seamlessly together.

- System Testing: Assesses the entire system's compliance with user requirements and performance.

- Load Testing: Tests a system's ability to handle high workloads and identifies performance issues.

- Error Testing: Evaluates how the software handles invalid inputs and error conditions.

- Test Automation: Automates test case execution for efficiency, repeatability, and error reduction.
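Error testing checks that invalid input fails in a controlled, predictable way instead of crashing or silently producing bad data. A minimal sketch in plain Node.js with a hypothetical `parseQuantity` validator; the accepted range (1 to 99) and the choice of `TypeError` versus `RangeError` are assumptions for illustration.

```javascript
// Hypothetical validator for an order-item quantity.
function parseQuantity(input) {
  const validType =
    typeof input === 'number' || (typeof input === 'string' && input.trim() !== '');
  const quantity = Number(input);
  if (!validType || !Number.isInteger(quantity)) {
    throw new TypeError(`Quantity must be an integer, got: ${String(input)}`);
  }
  if (quantity < 1 || quantity > 99) {
    throw new RangeError(`Quantity must be between 1 and 99, got: ${quantity}`);
  }
  return quantity;
}

// Table of invalid inputs and the error type each one should produce.
const invalidCases = [
  ['abc', TypeError],
  ['', TypeError],
  [null, TypeError],
  [2.5, TypeError],
  [0, RangeError],
  [100, RangeError],
];

for (const [input, ExpectedError] of invalidCases) {
  try {
    parseQuantity(input);
    console.log(`FAIL: ${String(input)} was accepted`);
  } catch (err) {
    const ok = err instanceof ExpectedError;
    console.log(`${ok ? 'PASS' : 'FAIL'}: ${String(input)} -> ${err.name}`);
  }
}
```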

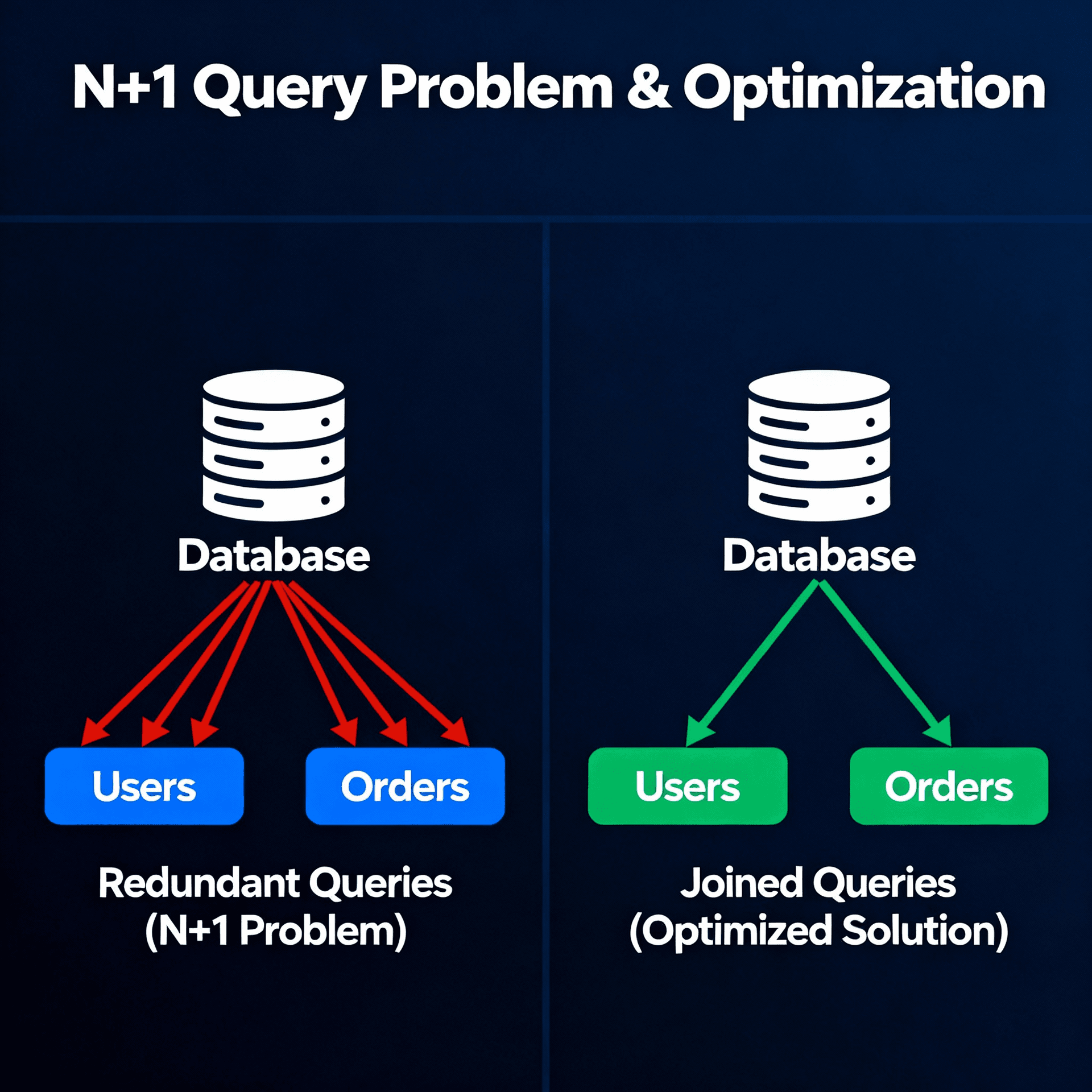

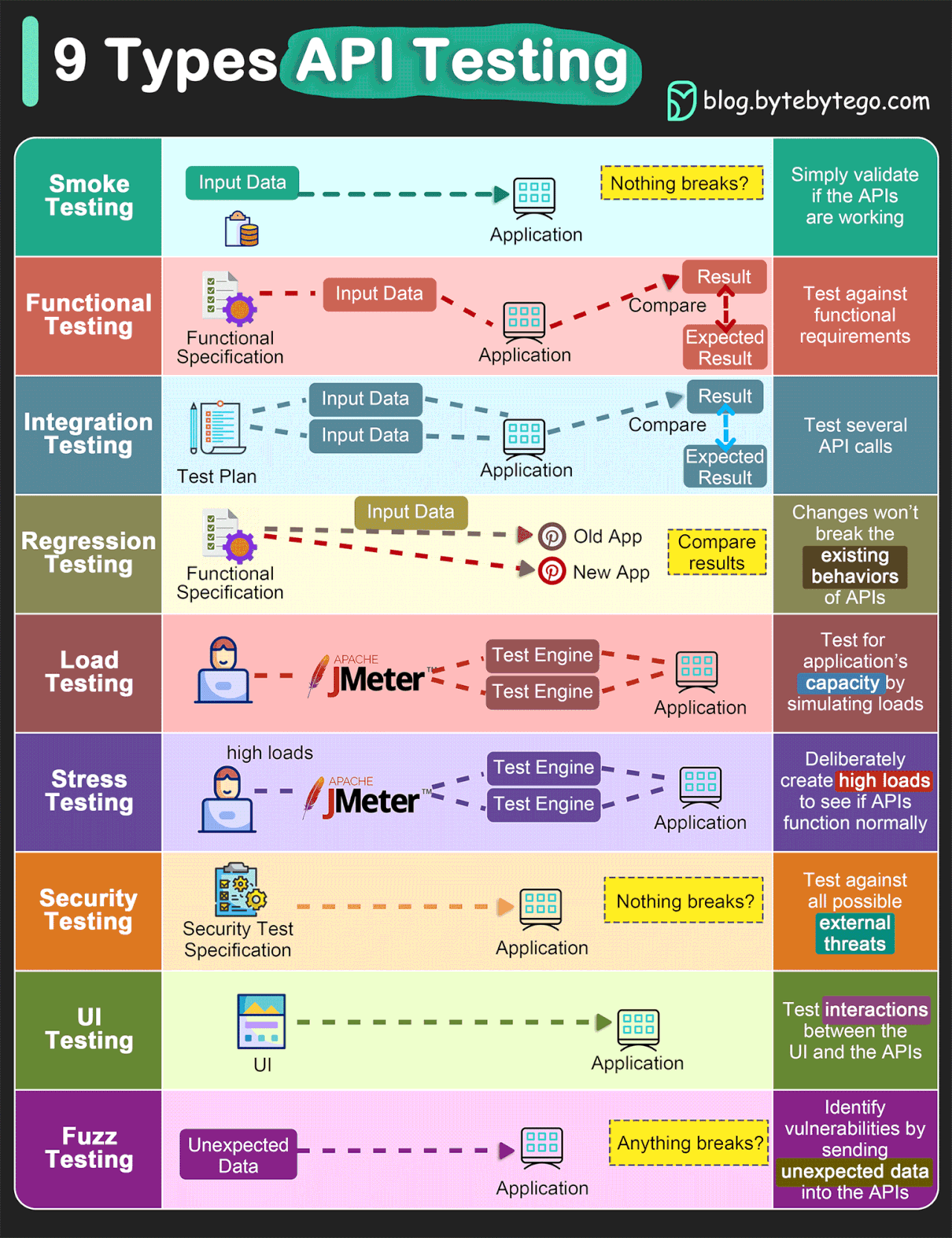

Testing APIs

- Smoke Testing - Done after API development is complete. It simply validates that the APIs work and that nothing breaks.

- Functional Testing - Creates a test plan based on the functional requirements and compares the actual results with the expected results.

- Integration Testing - Combines several API calls to perform end-to-end tests, covering intra-service communication and data transmission.

- Regression Testing - Ensures that bug fixes or new features don't break the existing behavior of the APIs.

- Load Testing - Tests the application's performance by simulating different loads, from which its capacity can be estimated.

- Stress Testing - Deliberately applies high loads to the APIs and checks whether they continue to function normally.

- Security Testing - Tests the APIs against potential external threats.

- UI Testing - Tests the UI's interactions with the APIs to make sure the data is displayed properly.

- Fuzz Testing - Injects invalid or unexpected input data into the API to try to crash it, which helps identify API vulnerabilities.
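A fuzz test in its simplest form generates many random inputs, feeds them to the code under test and flags any failure other than the expected, controlled rejection. A minimal sketch in plain Node.js: `parseOrderPayload` is a hypothetical request-body parser, and the seeded mulberry32 generator keeps the run reproducible, so a crashing input can be replayed. Real fuzzers are far more sophisticated (coverage guidance, input minimization); this only shows the core loop.

```javascript
// Controlled rejection: the only error type the parser is allowed to throw.
class ValidationError extends Error {}

// Hypothetical parser for an order request body.
function parseOrderPayload(raw) {
  let data;
  try {
    data = JSON.parse(raw);
  } catch {
    throw new ValidationError('Body is not valid JSON');
  }
  if (data === null || typeof data !== 'object' || !Array.isArray(data.items)) {
    throw new ValidationError('Body must contain an items array');
  }
  return data.items.map((item) => {
    if (item === null || typeof item !== 'object' || typeof item.price !== 'number') {
      throw new ValidationError('Each item needs a numeric price');
    }
    return { price: item.price };
  });
}

// Seeded PRNG (mulberry32) so every run generates the same inputs.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function next() {
    a = (a + 0x6d2b79f5) | 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

const rand = mulberry32(42);
const alphabet = '{}[]":,0123456789.-eE nulltruefalse\\items price';
const validPayload = '{"items":[{"price":10}]}';

function randomInput() {
  if (rand() < 0.5) {
    // Mutate a valid payload: replace one character at a random position.
    const i = Math.floor(rand() * validPayload.length);
    const c = alphabet[Math.floor(rand() * alphabet.length)];
    return validPayload.slice(0, i) + c + validPayload.slice(i + 1);
  }
  // Otherwise build a random string from JSON-like characters.
  const len = Math.floor(rand() * 30);
  let s = '';
  for (let k = 0; k < len; k++) s += alphabet[Math.floor(rand() * alphabet.length)];
  return s;
}

// Any error that is not a ValidationError counts as a crash worth investigating.
const crashes = [];
for (let run = 0; run < 10000; run++) {
  const input = randomInput();
  try {
    parseOrderPayload(input);
  } catch (err) {
    if (!(err instanceof ValidationError)) crashes.push({ input, err });
  }
}
console.log(`${crashes.length} unexpected crashes`);
```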

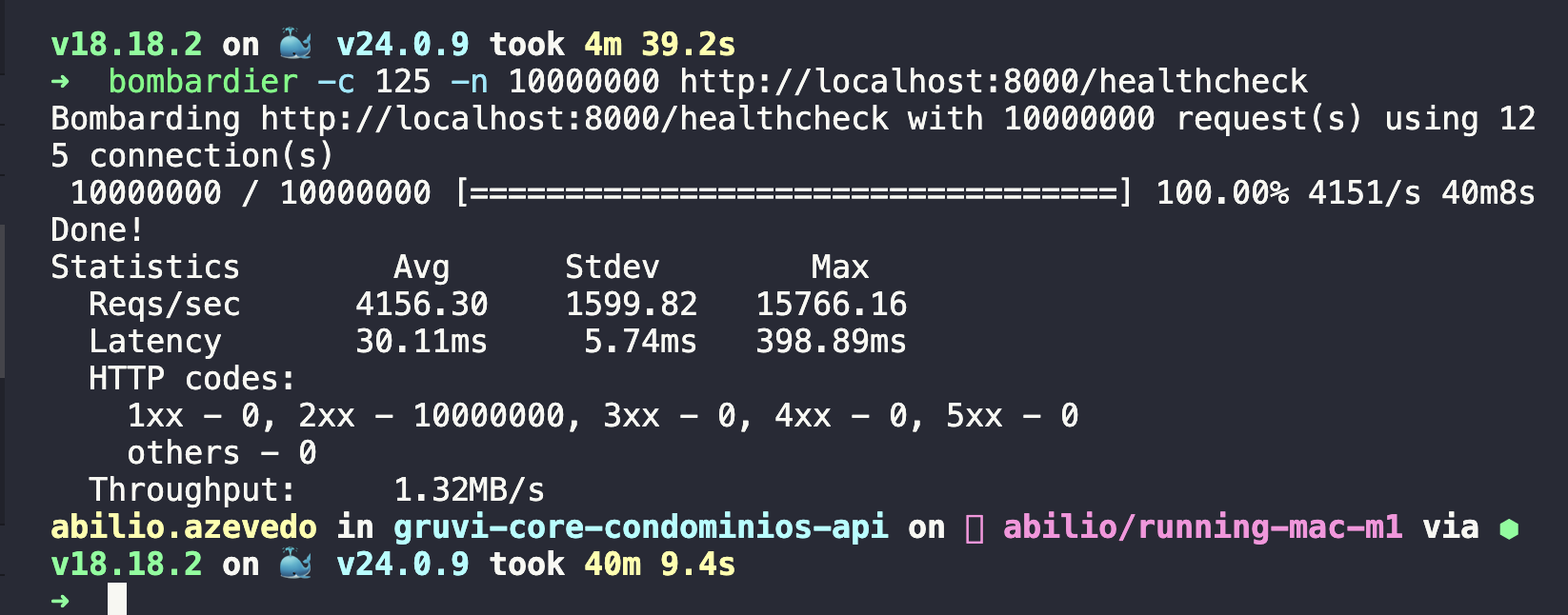

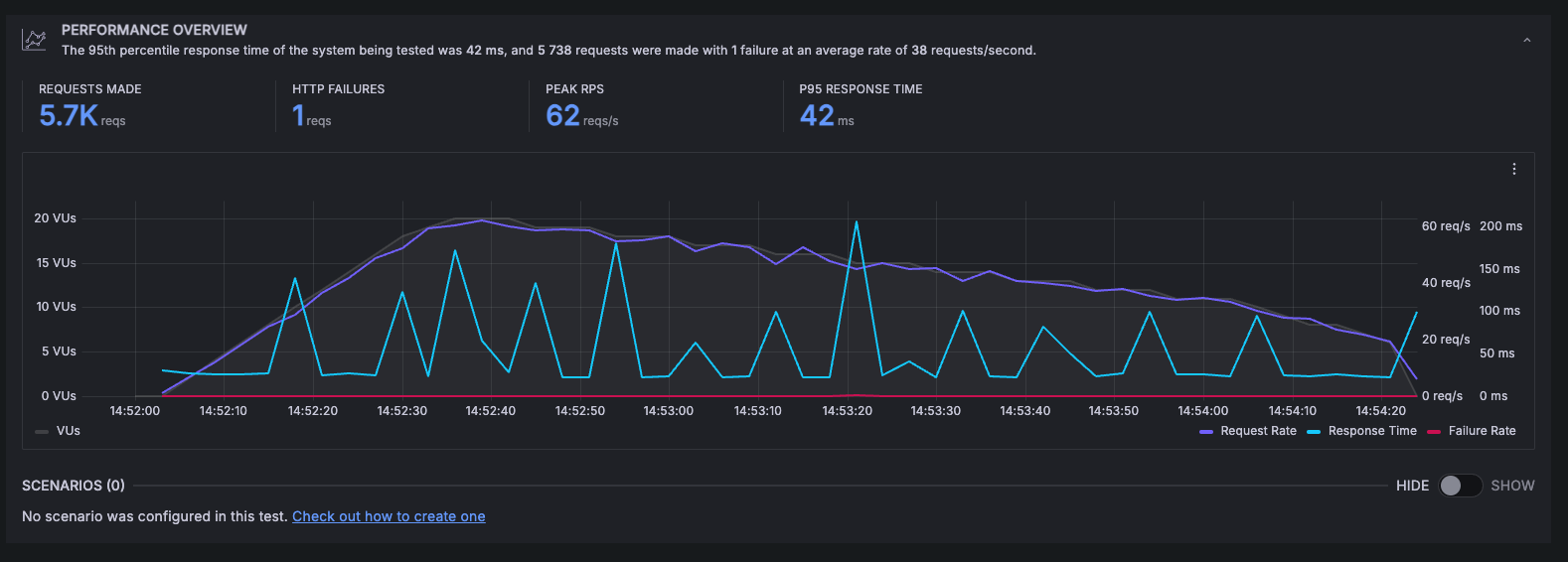

Load Test

Load testing is an important part of validating the performance and reliability of APIs under heavy usage.

Defining requirements is crucial for effective load testing. Consider the expected production workload and target metrics like max throughput, concurrent users, and acceptable response times.

Determine the APIs and scenarios to test. Prioritize critical user journeys and high traffic endpoints. Outline the datasets to use for parameters and request bodies to simulate real data.

- Bombardier is a simple CLI tool for performing load tests by bombarding an API with requests. It can simulate many concurrent connections to test throughput. Bombardier is quick and easy to set up for basic load tests.

- Grafana k6 is better suited to complex scenarios. You write test scripts in JavaScript to define flexible test scenarios. k6 can simulate thousands of virtual users at the protocol level, and its browser module can also drive real browser sessions. The Grafana integration provides visualization and monitoring.

- JMeter allows recording scenarios, parameterization, assertions to validate responses, and detailed reporting. The plugin ecosystem provides many extensions.

Here are some important parameters for load testing:

- VU (Virtual Users) - Number of simulated users that will be accessing the application at the same time. More VUs means higher load.

- Ramp-up - Time required to increase the number of VUs to the desired level. A slower ramp-up is more realistic.

- Duration - Time that the test will run at maximum load. Longer durations make the results more reliable.

- Throughput - Rate of successfully processed requests (for example, requests per second). Even under high load, throughput should not drop drastically.

- Latency - Application response time. Latency should remain low and stable.

- Errors - Rate of failed requests. Errors should not increase as the load grows.

- Concurrency - Number of users accessing the application simultaneously. Tests should approach the maximum supported concurrency.

- Ramp-down - Time to gradually decrease the load at the end of the test.

Monitoring these parameters during load tests is essential to evaluate application behavior and scalability.
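The parameters above can be made concrete with a small calculation: a load profile that ramps VUs up, holds them at maximum load and then ramps down, plus a summary that turns collected samples into throughput, latency percentiles and error rate. This is a plain Node.js sketch, not the API of Bombardier, k6 or JMeter; the profile values and sample data are hypothetical. (In k6, the same kind of profile is expressed with the `stages` option.)

```javascript
// Hypothetical profile: 30 s ramp-up to 50 VUs, 60 s at full load, 15 s ramp-down.
const profile = { targetVUs: 50, rampUpS: 30, durationS: 60, rampDownS: 15 };

// Number of active virtual users at second `t` of the test.
function vusAt(t, { targetVUs, rampUpS, durationS, rampDownS }) {
  if (t < 0) return 0;
  if (t < rampUpS) return Math.round((t / rampUpS) * targetVUs); // ramp-up
  if (t < rampUpS + durationS) return targetVUs; // steady state at maximum load
  const downElapsed = t - rampUpS - durationS;
  if (downElapsed < rampDownS) {
    return Math.round((1 - downElapsed / rampDownS) * targetVUs); // ramp-down
  }
  return 0;
}

// Nearest-rank percentile over an ascending array.
function percentile(sorted, p) {
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

// Summarize samples of { latencyMs, ok } collected over `elapsedS` seconds.
function summarize(samples, elapsedS) {
  const successes = samples.filter((s) => s.ok);
  const latencies = samples.map((s) => s.latencyMs).sort((a, b) => a - b);
  return {
    throughputRps: successes.length / elapsedS,
    p50Ms: percentile(latencies, 50),
    p95Ms: percentile(latencies, 95),
    errorRate: (samples.length - successes.length) / samples.length,
  };
}

console.log([0, 15, 30, 60, 97, 105].map((t) => vusAt(t, profile))); // [ 0, 25, 50, 50, 27, 0 ]

// Hypothetical samples: 20 requests in 2 seconds, the last one failed.
const samples = Array.from({ length: 20 }, (_, i) => ({ latencyMs: 100 + i * 10, ok: i !== 19 }));
console.log(summarize(samples, 2));
```

Percentiles such as p95 are usually more informative than the average latency, because a small share of slow requests can hide behind a good mean.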

Testing Tips

No matter which framework you use, the following tips will help you write UI components that are more testable, readable and composable:

- Favor pure components for UI code: given the same props, a pure component always renders the same output. If you need app state, you can wrap these pure components in a container component that manages state and side effects.

- Isolate business logic/business rules in pure reducer functions.

- Isolate side effects using container components.

Keep these buckets isolated:

- Display/UI Components

- Program Logic/Business Rules - the things that deal with the problem you are solving for the user.

- Side Effects (I/O, network, disk, etc.)
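The three buckets can be sketched in plain JavaScript: a pure reducer holds the business rules, a pure render function turns state into output, and a thin container function is the only place with side effects. `cartReducer`, `renderCart`, `checkout` and the injected `api.post` are hypothetical names, and the string rendering stands in for a real UI framework.

```javascript
// Business rules: a pure reducer, unit-testable without any test doubles.
function cartReducer(state = { items: [] }, action) {
  switch (action.type) {
    case 'ADD_ITEM':
      return { ...state, items: [...state.items, action.item] };
    case 'REMOVE_ITEM':
      return { ...state, items: state.items.filter((i) => i.id !== action.id) };
    default:
      return state;
  }
}

// Display: a pure "component"; the same props always produce the same output.
function renderCart({ items }) {
  const total = items.reduce((sum, i) => sum + i.price, 0);
  return `${items.length} item(s), total $${total.toFixed(2)}`;
}

// Side effects: the container is the only part that talks to the outside world.
async function checkout(state, api) {
  const response = await api.post('/orders', { items: state.items });
  return response.orderId;
}

let state = cartReducer(undefined, { type: '@@INIT' });
state = cartReducer(state, { type: 'ADD_ITEM', item: { id: 1, price: 50 } });
state = cartReducer(state, { type: 'ADD_ITEM', item: { id: 2, price: 20 } });
state = cartReducer(state, { type: 'REMOVE_ITEM', id: 1 });
console.log(renderCart(state)); // 1 item(s), total $20.00
```

Only `checkout` needs a test double (a stub for `api`); the reducer and the render function are tested with plain inputs and outputs.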

References

- https://martinfowler.com/testing/

- https://martinfowler.com/articles/mocksArentStubs.html

- https://blog.cleancoder.com/uncle-bob/2014/05/14/TheLittleMocker.html

Embedded content: https://docs.google.com/presentation/d/1YHgBQ0JJ-x5mIm5jaWv_xeVDusS0YpwAPvjfQdqM_YU/edit?usp=sharing