Artificial intelligence

Fundamentals of AI

AI Challenges

- Scarce data

- Lack of data quality

- Interpretability

- Explainability

- Social and ethical risks

Basic Statistics

01. What is Statistics?

02. Population and Sample

03. Variable Types

04. Central Limit Theorem

The central limit theorem is one of the main theorems in statistics. It states that when you take many independent random samples from a population with finite variance and calculate the mean of each sample, the distribution of those sample means approaches a normal distribution (bell curve) as the sample size increases, regardless of the shape of the original distribution.

The central limit theorem is important because it allows us to make inferences about a population based on a sample. For example, if you know the sampling distribution of the mean is normal, you can use a normal distribution table to calculate the probability that the sample mean is greater or less than a certain value.
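
As a rough illustration, the sketch below simulates the theorem using only the Python standard library. The exponential population, the sample sizes and the helper name `sample_means` are assumptions chosen for the example, not part of the original material.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is reproducible


def sample_means(sample_size, n_samples=2000):
    """Draw n_samples samples from a skewed (exponential) population
    and return the mean of each sample."""
    return [
        statistics.fmean(random.expovariate(1.0) for _ in range(sample_size))
        for _ in range(n_samples)
    ]


# Exponential(1) has mean 1 and standard deviation 1, and is strongly right-skewed.
for n in (2, 30, 200):
    means = sample_means(n)
    print(
        f"n={n:>3}  mean of sample means={statistics.fmean(means):.3f}  "
        f"std of sample means={statistics.stdev(means):.3f}  "
        f"theoretical std={1 / n ** 0.5:.3f}"
    )
```

As the sample size grows, the spread of the sample means should shrink towards the theoretical value of sigma divided by the square root of n, and a histogram of `means` should look increasingly bell-shaped.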

05. Measures of Central Tendency

06. Measures of Dispersion

07. Measures of Shape

08. Correlation

Correlation measures the strength and direction of the relationship (usually linear) between two variables: positive (they increase together), negative (one increases while the other decreases) or none. It matters for Machine Learning algorithms because it helps identify patterns and relationships between variables.

Correlation helps select relevant features for models, improving accuracy and interpretability. It also allows adjusting models to predict more accurately based on observed relationships in the data.
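
A minimal sketch of the Pearson correlation coefficient, written with the standard library so every step is visible. The variable names and numbers are invented for illustration; in practice pandas' `DataFrame.corr()` computes the same quantity.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data, made up for this example.
hours_studied = [1, 2, 3, 4, 5, 6]
exam_score = [52, 55, 61, 64, 70, 74]  # tends to rise with hours studied
hours_of_tv = [6, 5, 5, 3, 2, 1]       # tends to fall with hours studied

print("studied vs score:", round(pearson(hours_studied, exam_score), 3))
print("studied vs tv:   ", round(pearson(hours_studied, hours_of_tv), 3))
```

Values close to +1 or -1 indicate a strong linear association, and values near 0 indicate little or no linear association.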

09. Graphical Representations

EDA - Exploratory Data Analysis

The objective of Exploratory Data Analysis (EDA) is to take a good look at the data before starting more complicated things. It's like the curious investigator who first looks to understand what it's all about. The analysis helps uncover hidden secrets in the numbers, strange patterns and even errors, so we can make smarter decisions and tell more interesting stories with our data. It's like the first clue in a giant puzzle of information.

What is the Pandas library?

Pandas is a Python library widely used for data analysis. Its main advantage is its ability to manipulate, clean and efficiently analyze data. It provides flexible data structures like Series and DataFrames that allow organizing data in tables, performing complex operations like filters and aggregations, and easily visualizing results. Pandas is also compatible with various data sources like CSV files, Excel and databases, making it essential for data scientists and analysts who want to explore and extract insights from data in an effective and intuitive way.
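
The filters and aggregations mentioned above could look like the following sketch. The sales table and its column names are hypothetical and exist only for the example.

```python
import pandas as pd

# Hypothetical sales data, invented for illustration.
df = pd.DataFrame(
    {
        "region": ["north", "south", "north", "south", "east"],
        "units": [10, 3, 7, 12, 5],
        "price": [2.5, 4.0, 2.5, 4.0, 3.0],
    }
)

# Derived column: revenue per row.
df["revenue"] = df["units"] * df["price"]

# Filter: keep only rows with at least 5 units sold.
large_orders = df[df["units"] >= 5]

# Aggregation: total revenue per region.
revenue_by_region = df.groupby("region")["revenue"].sum()

print(large_orders)
print(revenue_by_region)
```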

Missing Data Types

Formulating Hypotheses

Univariate Analysis

Univariate analysis is a statistical approach that focuses on the analysis of a single variable in a dataset. It aims to understand the individual characteristics of that variable by examining its distribution, summary measures (like mean and median), variability and the presence of outliers. This helps gain a detailed insight into the features of a specific variable before exploring relationships with other variables (bivariate or multivariate analysis) during data analysis.
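
A small sketch of the summary measures described above for a single variable, using the standard library. The `ages` values are made up for the example.

```python
import statistics

# Hypothetical values of one variable.
ages = [22, 25, 25, 27, 29, 31, 34, 35, 41, 58]

summary = {
    "count": len(ages),
    "mean": statistics.fmean(ages),
    "median": statistics.median(ages),
    "mode": statistics.mode(ages),
    "std": statistics.stdev(ages),  # sample standard deviation
    "min": min(ages),
    "max": max(ages),
}

for name, value in summary.items():
    print(f"{name:>6}: {value}")
```

A mean noticeably above the median, as in this made-up data, hints at a right-skewed distribution and is a cue to look for large values at the upper end.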

Bivariate Analysis

Bivariate analysis is a statistical technique that focuses on the relationship between two variables in a dataset. It seeks to understand how one variable is related to the other, often using charts, crosstabs and correlation calculations. This helps identify patterns, associations and dependencies between the two variables, providing insights on how they interact, which is crucial for data analysis and informed decision making.
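
For two categorical variables, the crosstab mentioned above can be sketched with a plain counter. The survey rows, the column meanings (`plan`, `churned`) and the helper `churn_rate` are hypothetical.

```python
from collections import Counter

# Hypothetical survey rows: (plan, churned)
rows = [
    ("basic", "yes"), ("basic", "no"), ("basic", "yes"), ("basic", "yes"),
    ("premium", "no"), ("premium", "no"), ("premium", "yes"), ("premium", "no"),
]

counts = Counter(rows)                 # frequency of each (plan, churned) pair
plans = sorted({plan for plan, _ in rows})
outcomes = sorted({churned for _, churned in rows})


def churn_rate(plan):
    """Share of customers on a plan whose 'churned' value is 'yes'."""
    total = sum(counts[(plan, outcome)] for outcome in outcomes)
    return counts[(plan, "yes")] / total


# Print the crosstab: one row per plan, one column per outcome.
print("plan     " + "  ".join(f"{o:>4}" for o in outcomes))
for plan in plans:
    print(f"{plan:<8} " + "  ".join(f"{counts[(plan, o)]:>4}" for o in outcomes))

for plan in plans:
    print(f"churn rate for {plan}: {churn_rate(plan):.2f}")
```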

Outliers

An outlier is a data point that is very different from the other data points in a dataset. It falls far from where the rest of the data lies.

For example, imagine you have a dataset that records the height of 100 people. The average height is 1.70 meters. An outlier would be a person who is 2.50 meters tall. This person is much taller than the others, so they are considered an outlier.

Outliers can be caused by various factors like measurement errors, incomplete data or random events. They can affect the results of data analysis, so it's important to identify them and deal with them properly.
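
One common way to flag outliers is the interquartile range (IQR) rule, sketched below. The rule, the `k=1.5` multiplier and the height values are assumptions for illustration; the original material does not prescribe a specific method.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical heights in meters, including one implausible value.
heights = [1.62, 1.65, 1.68, 1.70, 1.71, 1.73, 1.75, 1.78, 1.80, 2.50]

print("outliers:", iqr_outliers(heights))
print("mean with outlier:   ", round(statistics.fmean(heights), 3))
print("mean without outlier:", round(statistics.fmean(heights[:-1]), 3))
```

Comparing the mean with and without the flagged value shows how a single extreme point can shift summary statistics.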

Dealing with Outliers

Automating EDA

Automating exploratory data analysis (EDA) offers several advantages including:

- Increased speed and efficiency: Automated EDA can be performed much faster than manual EDA. This can be important for large or complex datasets.

- Reduced subjectivity: Automated EDA is less prone to human errors or biases. This can lead to more accurate and reliable analysis.

- Better data understanding: Automated EDA can identify patterns and trends that may not be obvious to human analysts. This can aid in obtaining a fuller understanding of the data and making better decisions.

Machine Learning

Machine learning is a field of Artificial Intelligence concerned with systems that learn from data, imitating the way humans learn, and gradually improve with experience.

The algorithms learn automatically from their errors, with minimal human intervention, and after training they can identify patterns, make predictions and make decisions, all based on the data collected.

Algorithm Types

Supervised Learning

Reinforcement Learning

Dimensionality

The term "curse of dimensionality" was coined by mathematician Richard Bellman in his 1957 book "Dynamic Programming". It states that the amount of data needed to reach a given level of accuracy grows exponentially with the number of attributes.

In short, it refers to a series of problems that arise when working with high dimensional data. The dimension of a dataset is the number of features it contains.
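
Two symptoms of this can be sketched with the standard library: the number of cells needed to cover the feature space explodes, and distances between random points become hard to tell apart. The helper names, the 10 bins per feature and the uniform random data are assumptions made for the example.

```python
import math
import random

random.seed(0)  # fixed seed for reproducibility


def cells_to_cover(bins_per_feature, n_features):
    """Grid cells needed if every feature is split into the same number of bins."""
    return bins_per_feature ** n_features


def relative_contrast(n_features, n_points=200):
    """(farthest - nearest) / nearest distance from a random query point
    to random points in the unit hypercube."""
    query = [random.random() for _ in range(n_features)]
    dists = [
        math.dist(query, [random.random() for _ in range(n_features)])
        for _ in range(n_points)
    ]
    return (max(dists) - min(dists)) / min(dists)


for d in (1, 2, 5, 10):
    print(f"{d:>2} features -> {cells_to_cover(10, d):,} cells to cover the space")

for d in (2, 50, 500):
    print(f"{d:>3} features -> relative contrast {relative_contrast(d):.2f}")
```

As the number of features grows, the relative contrast should shrink, meaning the nearest and farthest neighbors look almost equally distant.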

Feature Engineering and Selection

Feature engineering is a fundamental step in the machine learning model development process. It refers to the process of selecting, extracting, transforming or creating new variables (features) from the raw data to improve model performance. Good feature engineering can make a model more accurate, efficient and interpretable.

Feature engineering is an iterative process. AI/ML experts typically start with an initial feature set and then test different feature combinations to determine the best configuration for the model.
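
A minimal sketch of turning raw fields into new features. The raw record, its field names and the specific transformations are hypothetical; which features actually help depends on the problem and has to be tested iteratively, as described above.

```python
import math
from datetime import date


def engineer_features(raw):
    """Build derived features from one raw record (hypothetical schema)."""
    signup = date.fromisoformat(raw["signup_date"])
    return {
        # Log transform compresses a long-tailed numeric variable.
        "income_log": math.log1p(raw["income"]),
        # Ratio feature combining two raw variables.
        "debt_to_income": raw["debt"] / raw["income"],
        # Features extracted from a date (Monday is 0, Sunday is 6).
        "signup_weekday": signup.weekday(),
        "is_weekend_signup": int(signup.weekday() >= 5),
    }


raw_record = {"income": 4000.0, "debt": 1000.0, "signup_date": "2024-03-09"}
features = engineer_features(raw_record)
print(features)
```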

Overfitting and Underfitting

- Underfitting occurs when a machine learning model is too simple to learn the relationship between variables in the training data. This results in a model that performs poorly on the training data and is also unable to make accurate predictions on new data.

- Overfitting occurs when a machine learning model learns the relationship between variables in the training data too closely, including noise in the data. This can result in a model that fits the training data well, but fails to generalize to new data.
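
Both situations can be sketched by fitting polynomials of different degrees to the same noisy data. The sine-shaped target, the noise level and the degrees 1, 3 and 12 are assumptions chosen for the example, and the exact numbers depend on the random seed.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)  # fixed seed for reproducibility


def make_data(n):
    """Evenly spaced x values with a noisy sine-shaped target."""
    x = np.linspace(0, 1, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0, 0.2, n)
    return x, y


x_train, y_train = make_data(15)
x_test, y_test = make_data(200)


def mse(model, x, y):
    """Mean squared error of a fitted polynomial on (x, y)."""
    return float(np.mean((model(x) - y) ** 2))


results = {}
for degree in (1, 3, 12):
    model = Polynomial.fit(x_train, y_train, degree)  # least-squares fit
    results[degree] = (mse(model, x_train, y_train), mse(model, x_test, y_test))
    print(
        f"degree {degree:>2}: train MSE={results[degree][0]:.3f}  "
        f"test MSE={results[degree][1]:.3f}"
    )
```

The degree 1 model should show high error on both sets (underfitting), while the degree 12 model should show a very low training error together with a clearly higher test error, which is the typical signature of overfitting.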

Bias-Variance Tradeoff

The bias-variance tradeoff describes the tension between fitting the training data closely and generalizing to new data: reducing one source of error tends to increase the other.

Bias is the systematic error a model makes when learning from data. It occurs when the model cannot learn the true relationship between the predictor and response variables, typically because it is too simple.

Variance is the variability in the results of a model applied to different data sets. It occurs when the model is too complex or when the training data is insufficient.

- Low Bias and Low Variance: the ideal model, with good accuracy and precision in predictions.

- Low Bias and High Variance: the model is overfitting the training data and does not generalize well to new data.

- High Bias and Low Variance: the model is underfitting the training data and does not capture the true relationship between the predictor and response variables.

- High Bias and High Variance: the model is inconsistent and has very low accuracy in predictions.
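
For squared error, the tradeoff is often written as the decomposition below. The notation is an assumption introduced here and does not appear in the original material: the data follow y = f(x) + ε with zero-mean noise of variance σ², and the expectation is taken over training sets used to fit the estimator f̂.

```latex
\mathbb{E}\left[\left(y - \hat{f}(x)\right)^2\right]
  = \underbrace{\left(\mathbb{E}[\hat{f}(x)] - f(x)\right)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\left[\left(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\right)^2\right]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

The last term cannot be reduced by any model, so improving a model means lowering the sum of the first two.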

Model Validation

Always check:

- Interpretability

- Fairness

- Efficiency

- Safety

Model Ensembling

Model ensembling is a machine learning technique that combines the predictions from multiple models to improve overall performance. This technique is based on the principle that combining models can help reduce bias and variance, leading to more accurate predictions.

Ensembling techniques are often used in machine learning competitions, where combining models can provide a critical advantage. However, it's worth noting that ensembles can increase complexity and training time, so it's always good to consider the performance vs. complexity tradeoff.
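
The variance reduction can be sketched with a simple simulation in which each "model" is a noisy estimate of the same value and the ensemble averages ten of them. This assumes independent, unbiased errors, which real models only approximate; plain averaging of this kind lowers variance, not bias. All names and numbers are invented for the example.

```python
import random
import statistics

random.seed(1)  # fixed seed for reproducibility

TRUE_VALUE = 10.0  # hypothetical quantity every model tries to predict
NOISE_STD = 2.0    # each model's independent error, assumed unbiased


def single_model_prediction():
    """One model: the true value plus independent Gaussian noise."""
    return TRUE_VALUE + random.gauss(0, NOISE_STD)


def ensemble_prediction(n_models):
    """Ensemble: the average of n_models independent predictions."""
    return statistics.fmean(single_model_prediction() for _ in range(n_models))


def mean_squared_error(predict, trials=5000):
    """Average squared error of a prediction function over many trials."""
    return statistics.fmean((predict() - TRUE_VALUE) ** 2 for _ in range(trials))


mse_single = mean_squared_error(single_model_prediction)
mse_ensemble = mean_squared_error(lambda: ensemble_prediction(10))

print(f"single model MSE:   {mse_single:.3f}")
print(f"10-model ensemble:  {mse_ensemble:.3f}")
```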

AI/ML Project Structure

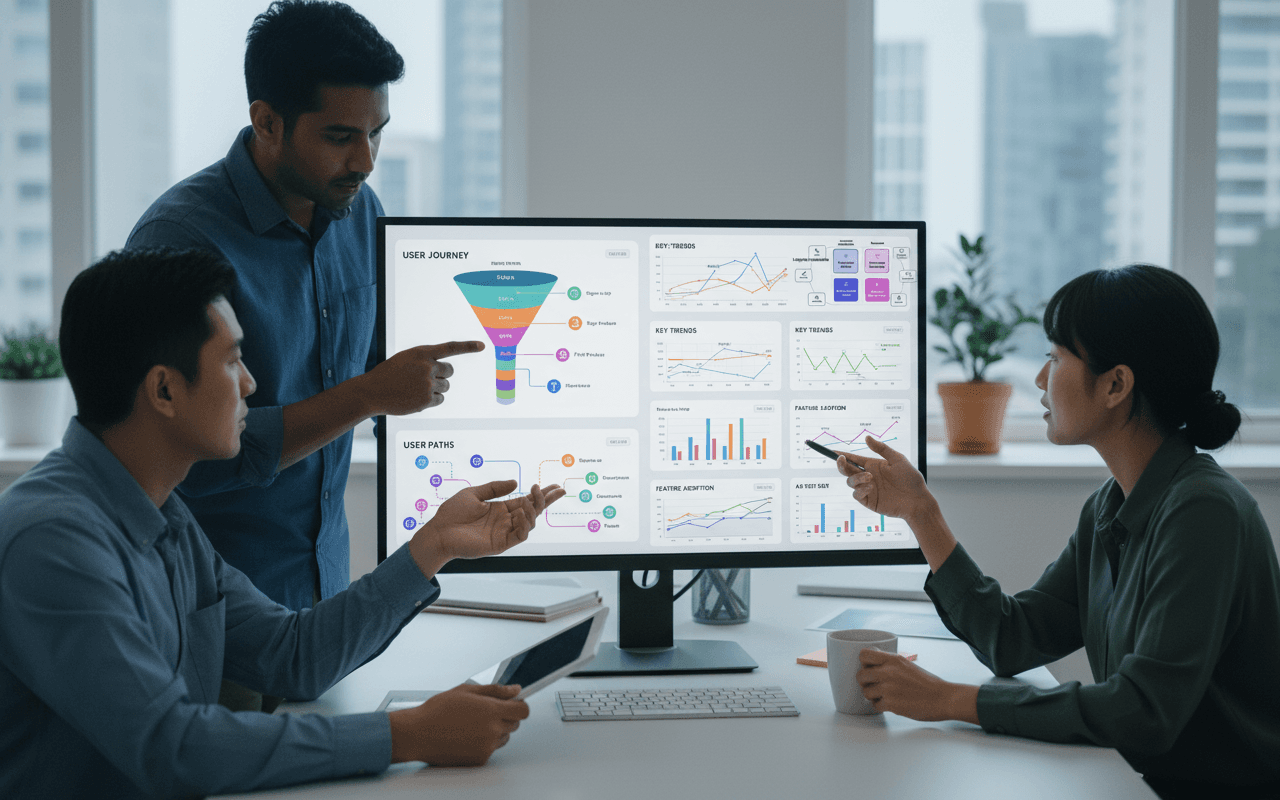

Adopting a methodology for AI/ML projects is essential to structure and standardize the development process, ensuring each phase is approached in a systematic and comprehensive way. A methodical approach not only facilitates identifying and correcting flaws like overfitting, but also promotes reproducibility, allowing other data scientists and engineers to easily replicate the work.

In addition, this structure optimizes iteration and model refinement, and facilitates documentation and communication with stakeholders, ensuring transparency, collaboration and efficiency throughout the project.

CRISP-DM

Cross Industry Standard Process for Data Mining

It was created in 1996 and became the most widespread methodology in data science for use in AI/ML projects.

CRISP-DM is cyclical, meaning it is common to return to previous steps as we move forward in the project, allowing continuous refinements until the desired result is achieved.

Combined with Lean methods, it delivers value to the customer incrementally, following the "Fail Fast, Learn Faster" principle.

ML Canvas

It was created in 2016 as a tool to help teams and stakeholders plan and communicate the key aspects of a machine learning project in a clear and concise way.

The concept was inspired by the Business Model Canvas, but adapted specifically for the unique challenges and components of machine learning projects.

The ML Canvas has been used by professionals in the field to structure and plan ML initiatives, helping ensure all key elements are considered and understood by all parties involved.

machinelearningcanvas.com by Louis Dorard, Ph.D.

AI Canvas

It was created in 2018 by University of Toronto professors to help people make better decisions and structure projects with the aid of AI/ML.

It also uses a framework similar to the Business Model Canvas, but places greater emphasis on the human element, capturing the judgment that will be made about predictions, the actions that need predictions, and feedback for continuous model improvement.

Models

Regression Analysis

Regression analysis is a statistical approach that seeks to investigate and quantify relationships between variables. It is used to understand how a dependent variable (target) is related to one or more independent variables (factors believed to influence the dependent variable).

This technique allows building a mathematical model, usually in the form of a linear equation, representing this relationship. By fitting the model to observed data, we can estimate parameters and understand how changes in the independent variables affect the dependent variable.

This is key to making predictions, evidence-based decision making and better understanding patterns in real-world data.
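
Fitting a linear equation to observed data can be sketched with the closed-form least-squares solution for one independent variable. The function name and the data are hypothetical; libraries such as scikit-learn provide the same fit for real projects.

```python
def fit_simple_linear_regression(xs, ys):
    """Least-squares slope and intercept for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical observations: years of experience vs. salary (in thousands).
experience = [1, 2, 3, 4, 5, 6]
salary = [32.0, 35.5, 41.0, 43.5, 49.0, 52.5]

slope, intercept = fit_simple_linear_regression(experience, salary)
print(f"salary = {intercept:.2f} + {slope:.2f} * experience")

# Use the fitted parameters to predict the target for a new value.
predicted = intercept + slope * 7
print(f"predicted salary for 7 years: {predicted:.2f}")
```

The slope estimates how much the dependent variable changes for each one-unit change in the independent variable.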

Regression Types

Simple Linear Regression

The goal of this module is to introduce the main regression algorithms conceptually, so we can develop machine learning projects that predict numeric values. We will also build a project around the first of these algorithms, simple linear regression, going through the full process from EDA to serving the model through an API for other applications to consume.

You can find the final project here.

Tools Used

- FastAPI - A modern, high-performance web framework for building APIs with Python.

- joblib - Provides utilities for saving and loading Python objects, including caching and efficient persistence of large NumPy arrays. Used here to read the pkl file that holds the trained model. Python pickle files may have the extension ".pickle" or ".pkl" and store serialized Python objects.

- uvicorn - A lightning-fast ASGI server implementation for Python.

- pydantic - Data validation and settings management using Python type hints.

Multiple Linear Regression

You can find the final project here.

Tools Used

- Gradio allows creating interactive graphical user interfaces for machine learning models in a quick and simple way.

Polynomial Regression

A polynomial is a mathematical expression representing the sum of terms, each consisting of variables raised to non-negative integer exponents, multiplied by coefficients. For example, 3x^2 + 2x - 5 is a polynomial, where 3x^2, 2x and -5 are the terms. The coefficients are the numbers multiplying the variables, like 3 and 2 in the example. The exponents, like 2 in the term 3x^2, indicate the degree of each term. The degree of a polynomial is the highest exponent among its terms.

Polynomials are fundamental across many areas of mathematics and science, being used to model relationships, solve equations, and describe numerical patterns.

Polynomial features are created and used to train the linear regression model.
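
A minimal sketch of that idea: expand x into the columns 1, x and x², then fit an ordinary linear model on those columns. It reuses the example polynomial 3x^2 + 2x - 5 as noise-free data; the helper name `polynomial_features` is an assumption, and scikit-learn's `PolynomialFeatures` plays the same role in a typical project.

```python
import numpy as np


def polynomial_features(x, degree):
    """Return a matrix with columns x**0, x**1, ..., x**degree."""
    return np.vander(x, degree + 1, increasing=True)


# Noise-free data generated from 3x^2 + 2x - 5.
x = np.linspace(-3, 3, 25)
y = 3 * x**2 + 2 * x - 5

# Linear least squares on the polynomial features.
X_poly = polynomial_features(x, degree=2)
coefs, *_ = np.linalg.lstsq(X_poly, y, rcond=None)

print("intercept, x, x^2 coefficients:", np.round(coefs, 3))
```

The model stays linear in its coefficients; only the inputs are transformed, which is why a linear regression can fit a curved relationship.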

Before going straight to polynomial regression, let's test the plain linear model first to check whether it already fits the data well or underfits it.

You can find the final project here.

Tools Used

- Streamlit is a Python library that lets you create web apps for machine learning quickly and easily, without needing front-end knowledge.

Train Models with your own data

Fine-tuning

Fine-tuning involves taking a pre-trained AI model, like GPT-3, and training it a little more on a new dataset or for a specific task. This updates the model's weights so that it adapts better to the new task, usually without drastically altering the general knowledge acquired during pre-training.

Semantic search

- Embeddings: The text of the queries and documents is converted into embeddings, which are dense vector representations that capture the semantic meaning of words and phrases. This can be done with pre-trained models like Word2Vec or BERT.

- Indexing: The embeddings are indexed in a specialized structure, like a tree or graph, that allows approximate search based on semantic similarity.

- Query: When a user query is received, it is converted into an embedding using the same model.

- Approximate search: The query embedding is compared to the indexed document embeddings by meaning, instead of exact words. The most semantically similar documents are returned.

- Ranking: The results can be ranked by relevance using their semantic similarity to the query, so the most relevant appear first.
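
The comparison and ranking steps above can be sketched as follows. The three-dimensional vectors are hand-written stand-ins for embeddings that a real model would produce, and the search is an exact brute-force scan rather than the approximate index described above; titles, vectors and function names are all hypothetical.

```python
import math

# Hand-written vectors standing in for model-generated embeddings.
documents = {
    "How to reset your password": [0.9, 0.1, 0.0],
    "Updating billing information": [0.1, 0.9, 0.1],
    "Recovering a locked account": [0.7, 0.1, 0.4],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_embedding, top_k=2):
    """Score every document against the query and return the best matches."""
    scored = [
        (cosine_similarity(query_embedding, embedding), title)
        for title, embedding in documents.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return scored[:top_k]


# Pretend this is the embedding of the query "I forgot my login".
query_embedding = [0.9, 0.05, 0.1]

for score, title in search(query_embedding):
    print(f"{score:.3f}  {title}")
```

A vector database replaces the brute-force loop with an index so that the same ranking can be approximated quickly over millions of documents.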

You can find the final project here.

Tools Used

- OpenAI API: The OpenAI API provides access to models like GPT-3 for generating text, summarizing content, translating languages and more through a paid, usage-based service.

- LangChain: LangChain is an open-source framework for building applications on top of large language models, connecting prompts, models, external data sources and tools into chains.

Natural Language Processing - NLP

Natural Language Processing (NLP) is a field of artificial intelligence that deals with the interaction between computers and human natural languages. The goal is to develop methods and systems that allow computers to understand, interpret and derive meaning from human text and speech.

Some common NLP tasks include:

- Syntax analysis: Identify the grammatical structure of sentences

- Semantic analysis: Extract the meaning of words and phrases

- Machine translation: Translate text between languages

- Automatic summarization: Generate concise summaries of long documents

- Natural language generation: Produce new coherent text

- Speech recognition: Transcribe and interpret human speech

The main approaches in NLP involve the use of computational linguistics, machine learning (particularly deep learning) and statistical models. Applications include search engines, chatbots, virtual assistants and much more. It is a very active research area today.

SpaCy

SpaCy is a very popular Python package for natural language processing (NLP). Some features and capabilities of SpaCy:

- Syntactic parser that identifies the grammatical structure of sentences. It does morphological, syntactic and dependency parsing.

- Pre-trained statistical models for various languages that can be used for common NLP tasks.

- Efficient tokenization of text into words/tokens.

- Lemmatization to group different inflected forms of words.

- Named entity recognition (people names, organizations, locations etc).

- Vectorization of texts into dense numerical word and document representations.

- Integration with pre-trained Word Vectors like Word2Vec and GloVe.

- Easy to use Python APIs that make common NLP tasks convenient.

- High performance and ability to handle large volumes of text.

- Utilities to train customized models for specific tasks.

- Applications in text classification, sentiment analysis, translation, semantic search, chatbots, relation extraction and more.

Project to predict review rating

In this project we created an API that receives a review text and predicts a rating from 1 to 5. You can find the final project here.

Codebase

You can find the whole codebase here.

References

- OpenAI Cookbook: Example code and guides for accomplishing common tasks with the OpenAI API. To run these examples, you'll need an OpenAI account and associated API key (create a free account here).

- Jupiter by Rocketseat is a centralized cloud upload system that uses Cloudflare R2 for audio and video storage, a serverless PostgreSQL database on Neon, and Upstash for HTTP request queueing.

- Houston by Rocketseat is a chatbot built with GPT-3, showing how to create a conversational agent with AI.

- Qdrant is a vector database specialized in semantic search, allowing queries based on meaning rather than exact match. Useful for AI applications.

- Whisper-jax is a JAX implementation of OpenAI's Whisper speech recognition model. An example of advanced speech processing techniques.

- Beam is a serverless platform that runs AI models without needing to provision servers. Makes deploying AI applications easier.

- Drizzle ORM is a TypeScript ORM for SQL databases and Hono is a lightweight web framework for JavaScript and TypeScript. Both are useful for building the backend of AI applications.