Docker


Docker emerged in 2013 as an open source tool to facilitate the creation and deployment of applications in containers, solving many common problems related to consistency and portability in development and production environments.

Before Docker, it was very difficult to ensure that a development environment was identical to the production environment. This led to the infamous "works on my machine" problem. In addition, bringing up new environments and provisioning machines was slow and tedious.

Docker solves these problems by packaging applications along with their dependencies into isolated and portable containers. This ensures consistency and speed of deployment, allowing developers to work locally in the same way that the application is deployed in production.

Docker, Inc., the company behind the project, builds commercial products around the Docker ecosystem.

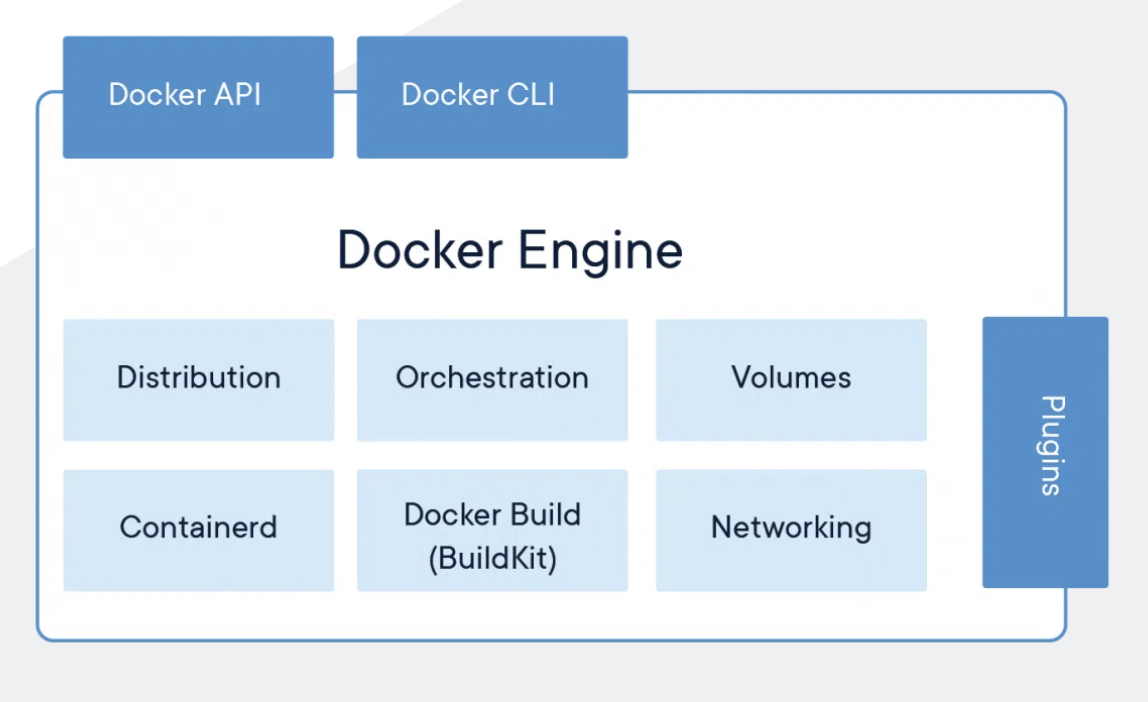

Docker Engine

The Docker Engine is responsible for:

- Building images - docker build (BuildKit)

- Distributing images

- Running containers - containerd (container runtime)

- Communication between containers - networks

- File sharing - volumes

- Orchestration between containers

The Docker Engine relies on Linux kernel features, so it runs natively on Linux. On macOS and Windows, the Docker Desktop application runs the Engine inside a lightweight Linux virtual machine.

Other applications, such as Colima, can also provide that Linux VM and Docker Engine.
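To check which engine the docker CLI is actually talking to, a sketch like the one below can help. The helper names `show_engine` and `use_colima` are hypothetical, and the `colima` context name assumes Colima's default profile.

```shell
# Hypothetical helper: show which Docker Engine the CLI is connected to.
show_engine() {
  docker context ls                                          # the active context is marked with *
  docker version --format '{{.Server.Os}}/{{.Server.Arch}}'  # the engine's OS/arch, e.g. linux/arm64
}

# Hypothetical helper: start Colima's Linux VM and point the docker CLI at it.
use_colima() {
  colima start               # boots a Linux VM that runs a Docker Engine
  docker context use colima  # assumes the default profile's context name, "colima"
}
```

Even on a Mac, `show_engine` should report `linux/...` for the server, because the Engine runs inside the Linux VM.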

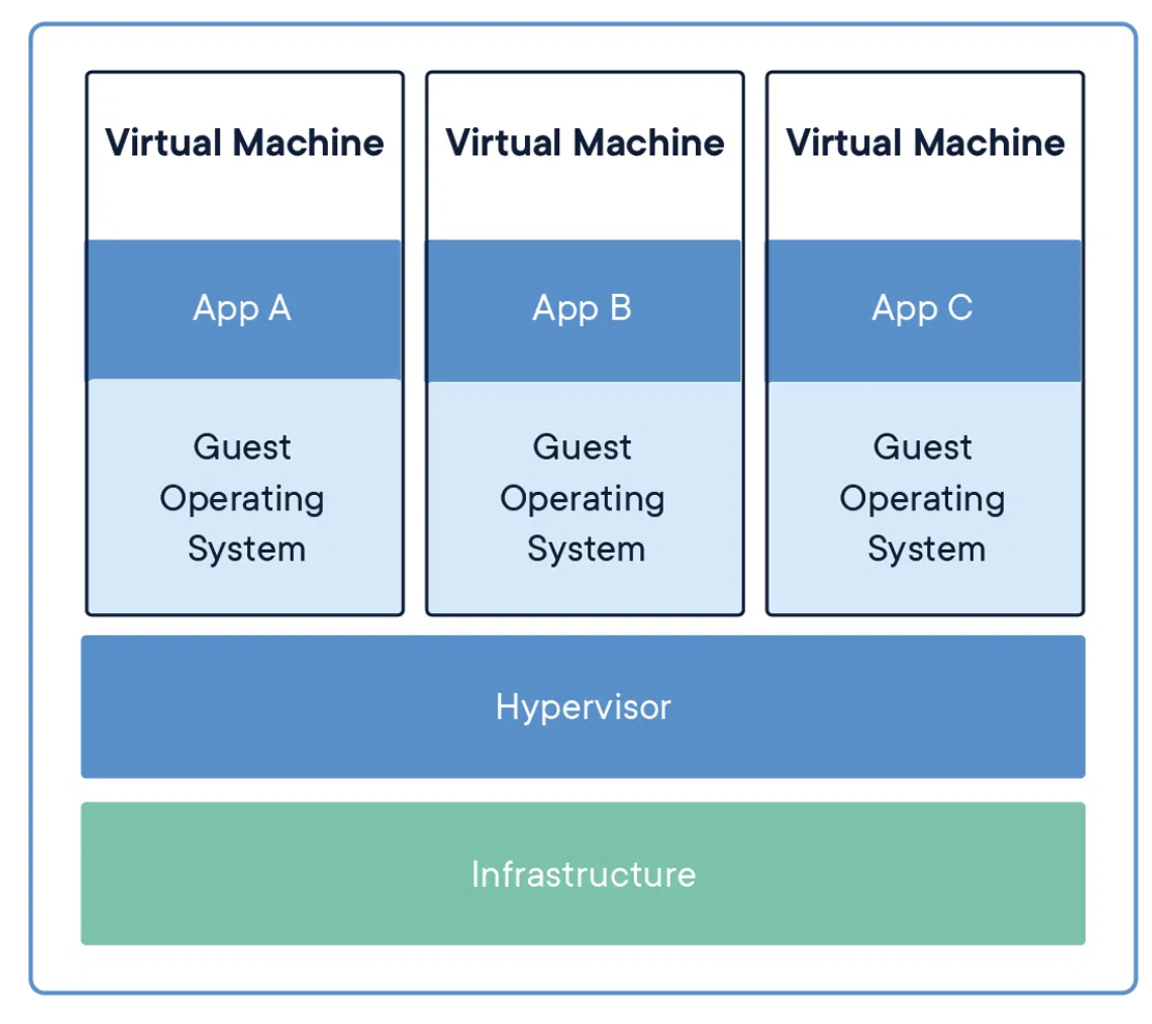

Virtual Machines

Virtual machines (VMs) are an abstraction of physical hardware that turns one server into multiple servers. A hypervisor allows multiple VMs to run on a single machine. Each VM includes a full copy of an operating system, the application, and the necessary binaries and libraries, taking up tens of GBs. VMs can also be slow to boot, and keeping them updated is more costly.

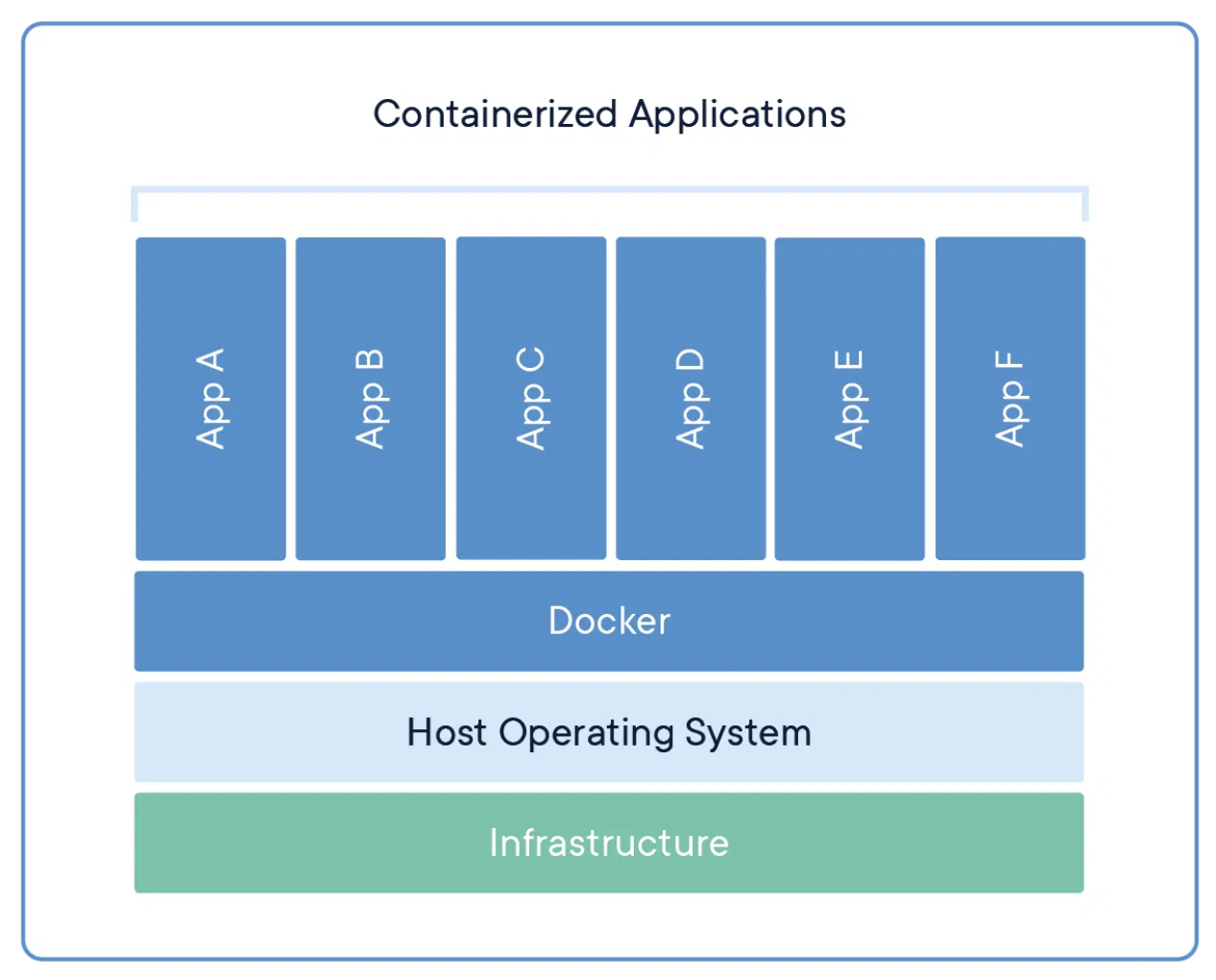

Containers

Containers are a lighter-weight alternative to virtual machines. They are an abstraction at the application layer that packages code and dependencies together. Multiple containers can run on the same machine and share the OS kernel with other containers, each running as isolated processes in user space. Containers take up less space than VMs (container images are typically tens of MBs in size), can handle more applications, and require fewer VMs and operating systems.
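Because containers share the kernel of the machine running the Engine, a container reports that same kernel release. A minimal sketch, assuming the public `alpine` image; `compare_kernels` is a hypothetical helper name:

```shell
# Hypothetical helper: compare the host's kernel with the one a container sees.
compare_kernels() {
  echo "host:      $(uname -r)"
  echo "container: $(docker run --rm alpine uname -r)"
}
```

On Linux both lines should match. On macOS or Windows they differ, because the container sees the kernel of Docker's Linux VM rather than the host's.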

Run a new container:

docker run -p <hostPort>:<containerPort> image-name

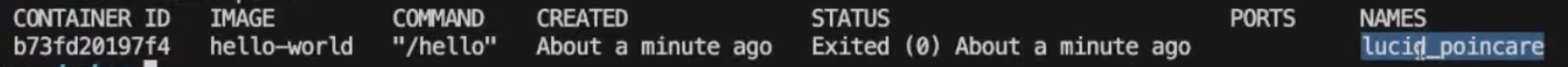

List the running containers; with the -a (all) flag, containers that are not currently running are listed too:

docker ps -a

Run a command inside a new container interactively (flag -i keeps STDIN open), with a terminal attached (flag -t allocates a pseudo-TTY), and as the root user (flag -u):

docker run -it -u root ubuntu bash

Download images from Docker Hub:

docker pull nginx

Publish (map) a container port to a port on your machine:

docker run -p 8080:80 nginx

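NOTE: The first port is the one exposed on your machine (the host) and the second is the container's port. Using a high host port such as 8080 also avoids privileged ports (below 1024), which on some setups, such as rootless Docker, require administrator (sudo) privileges.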

Execute a command in a running container:

docker exec container_name ls

The --rm flag in Docker is used to automatically remove the container when it exits. Here is an example running a Docker container with --rm:

docker run --rm ubuntu echo "Hello World"

docker run:

- Runs a command in a new container. This creates a new container instance from the given image and prepares it for running the given command. Once the command exits, the container stops.

- In short, docker run starts a new container for the specified command. When the command exits, the container stops, but it is not removed unless you pass --rm; stopped containers still appear in docker ps -a.

docker exec:

- Runs a command in an existing running container. This allows you to execute additional commands or a new interactive shell inside a running container.

- The container keeps running after the docker exec command completes. It does not create a new container; it simply lets you issue more commands to an already running container.
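The difference can be seen side by side in the sketch below. `run_vs_exec_demo` is a hypothetical helper and `web` is just an example container name.

```shell
# Hypothetical helper; "web" is an example container name.
run_vs_exec_demo() {
  docker run -d --name web nginx                  # start a long-running container in the background
  docker exec web ls /usr/share/nginx/html        # exec: runs inside the existing "web" container
  docker run --rm nginx ls /usr/share/nginx/html  # run: creates a second, throwaway container
  docker ps                                       # only "web" is still running
  docker rm -f web                                # clean up
}
```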

Volumes

When a container is removed, any changes made inside it are lost, because a new container starts again from the image specification. To persist data, or share it with your machine, we use volumes.

Share a folder between your machine (./html in the current folder) and the container (/usr/share/nginx/html) with a bind mount:

docker run -p 8080:80 -v $(pwd)/html:/usr/share/nginx/html nginx
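To see the bind mount in action, the sketch below writes a page on the host and serves it through nginx. The helper `preview_html` and the container name `html-preview` are hypothetical; it assumes `curl` is installed and port 8080 is free. The `:ro` suffix mounts the folder read-only inside the container.

```shell
# Hypothetical helper: serve ./html through nginx using a bind mount.
preview_html() {
  mkdir -p html
  echo '<h1>Hello from a bind mount</h1>' > html/index.html
  docker run -d --rm --name html-preview -p 8080:80 \
    -v "$(pwd)/html":/usr/share/nginx/html:ro nginx
  sleep 1                           # give nginx a moment to start
  curl -s http://localhost:8080     # should print the <h1> line written above
  docker stop html-preview          # --rm removes the container; ./html stays on your disk
}
```

Editing `html/index.html` on your machine and requesting the page again shows the change without rebuilding anything.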

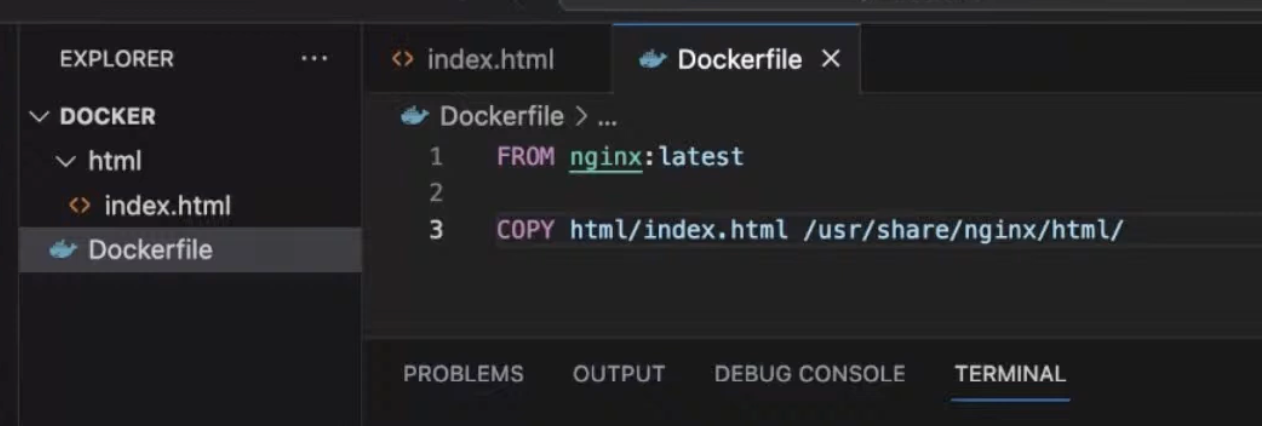

Dockerfile

The Dockerfile is a recipe for building Docker images automatically. It allows you to define a standardized and immutable environment for applications, ensuring that they always run the same way, regardless of where they are deployed. Every Dockerfile starts from a base image (the FROM instruction). The larger your image, the longer it takes to upload (push) and download (pull).

We can split the build into stages (a multi-stage build), so that build tools and source files stay out of the final image:

```dockerfile
# Base stage: install dependencies only in this stage
FROM node:16-alpine AS base
WORKDIR /app
COPY package*.json ./
RUN npm install

# Build stage
FROM base AS build
COPY . .
RUN npm run build

# Production stage
FROM node:16-alpine
WORKDIR /app
# Copy only what is needed
COPY --from=build /app/package*.json ./
COPY --from=build /app/dist ./dist
# Install only production dependencies; dist/ usually still needs them at runtime
RUN npm install --omit=dev
# Use the default node user
USER node
CMD [ "node", "dist/index.js" ]
```

Then build with the --target flag to stop at the desired stage:

docker build --target build .
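Building the `build` stage and the full image separately makes the size difference between stages visible. A sketch, assuming the multi-stage Dockerfile above is in the current folder; `compare_stage_sizes` and the `myapp` image name are hypothetical.

```shell
# Hypothetical helper; "myapp" is an example image name.
compare_stage_sizes() {
  docker build --target build -t myapp:build .  # stops after the "build" stage
  docker build -t myapp:prod .                  # builds through the last stage
  docker image ls myapp                         # compare the SIZE column of both tags
}
```

The `prod` tag is usually the smaller one, since it leaves out the source tree and development dependencies.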

It is important to set USER so the container does not run as root, which limits what a compromised process can do. If you bind-mount folders from your machine, it is also a good idea to run the container with the same user ID (UID) you use on your machine, so files the container creates are owned by you and you don't need sudo to change them.
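One common way to do that is to pass your host UID and GID through docker run's --user flag. A minimal sketch; `host_user` and `run_as_me` are hypothetical helper names, and the `/work` mount point is arbitrary.

```shell
# Hypothetical helper: print the host's "UID:GID" pair, e.g. 1000:1000.
host_user() {
  printf '%s:%s\n' "$(id -u)" "$(id -g)"
}

# Hypothetical helper: run an image as your host user with the current folder at /work.
# Usage: run_as_me IMAGE [COMMAND...]
run_as_me() {
  docker run --rm --user "$(host_user)" -v "$(pwd)":/work -w /work "$@"
}
```

For example, `run_as_me alpine touch created-by-container.txt` should leave a file in the current folder owned by you rather than by root.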

Image

An image is the template a container is created from: it describes the container instance. It is like a class (specification), and each container is an instance of it.

Build an image from the Dockerfile in the current folder (.) and tag it with a name (flag -t):

docker build -t kibolho/nginx:latest .
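Following the class/instance analogy, a single image can back many containers at once. A sketch using the public `nginx` image; `two_instances` and the container names `web1` and `web2` are hypothetical.

```shell
# Hypothetical helper: two container instances created from one image.
two_instances() {
  docker run -d --name web1 nginx
  docker run -d --name web2 nginx
  docker ps --filter ancestor=nginx  # both containers, created from the same image
  docker rm -f web1 web2             # clean up
}
```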

Docker Hub

Docker Hub is a public registry of Docker images. It allows developers to share and reuse components already built by others, avoiding the need to create everything from scratch. You can pull existing images or upload your own images created locally. Prefer trusted images, such as Docker Official Images and Verified Publisher images.

Upload (push) an image to Docker Hub:

docker push kibolho/nginx:latest
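Pushing requires being logged in, and it is common to push a version tag alongside latest. A sketch, assuming the local image is already tagged `REPOSITORY:latest` (as with kibolho/nginx above); `push_release` is a hypothetical helper name.

```shell
# Hypothetical helper. Usage: push_release REPOSITORY VERSION
push_release() {
  docker login                    # prompts for your Docker Hub credentials
  docker tag "$1:latest" "$1:$2"  # add a version tag to the local "latest" image
  docker push "$1:$2"
  docker push "$1:latest"
}
```

For example, `push_release kibolho/nginx 1.0` would publish both `kibolho/nginx:1.0` and `kibolho/nginx:latest`.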

Docker Compose

Docker Compose facilitates the definition and execution of multi-container Docker applications (orchestrator). With Compose, you can configure your services in YAML files and deploy your entire stack with a single command. This accelerates development and deployment workflows.

A docker-compose.yml file that runs a MySQL container:

```yaml
version: '3'

services:
  mysql:
    image: mysql:8
    container_name: mysql
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: root
      MYSQL_DATABASE: app
    ports:
      - 3306:3306
    volumes:
      - ./mysql:/var/lib/mysql
```
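A few Compose subcommands are enough to check that this MySQL service is up. A sketch, run from the folder containing the docker-compose.yml above; `check_mysql_stack` is a hypothetical helper name, and the root/root credentials come from the file.

```shell
# Hypothetical helper; run it next to the docker-compose.yml above.
check_mysql_stack() {
  docker compose up -d       # start the stack in the background
  docker compose ps          # show the mysql service and its state
  docker compose logs mysql  # startup logs of the mysql service
  # MySQL may need several seconds to initialize on first start;
  # if the next command fails, wait a little and run it again.
  docker compose exec -T mysql mysql -uroot -proot -e 'SHOW DATABASES;'
}
```

Once initialization has finished, the database list should include `app`.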

We can map a folder in the container to a folder on the host (a bind mount):

```yaml
volumes:
  - ./mysql:/var/lib/mysql
```

Or we can persist a path in a volume managed by Docker, without binding it to a host folder (an anonymous volume):

```yaml
volumes:
  - /opt/node_app/app/node_modules
```

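Also, on Docker Desktop for Mac we can add the :delegated option to a bind mount. It is a consistency hint: the container's view of the files is treated as authoritative, so changes made inside the container may take a moment to appear on the host. Relaxing consistency this way allowed some file-sharing performance optimizations; newer Docker Desktop versions may ignore the option.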

```yaml
volumes:
  - ./app:/opt/node_app/app:delegated
```

To run the containers described in the docker-compose.yml file in the background (flag -d):

docker compose up -d

To stop the containers described in the docker-compose.yml file without removing them:

docker compose stop

To stop and remove the containers (and the networks) created from the docker-compose.yml file:

docker compose down

Compatibility Issues

Even with emulation, some x86 (amd64) images have compatibility issues on Arm-based Apple silicon Macs, because the emulation layer does not support every x86 instruction or behavior an application may rely on. Whether an image works depends on what the specific application does.

The ecosystem is still catching up in terms of providing images with arm64 variants.

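A workaround is the platform key in docker-compose.yml, which selects the platform variant of an image that Docker pulls and runs. Use linux/arm64 to get a native variant when the image provides one (no emulation), or linux/amd64, as in the example below, to force the x86 variant and run it under emulation when no Arm variant exists.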

```yaml
version: '3'

services:
  app:
    image: mysql:8
    platform: linux/amd64
```
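To decide which platform value to use, it helps to know the host architecture and the architecture of the image variant Docker actually pulled. A sketch; `host_docker_platform` and `image_arch` are hypothetical helper names, and the mapping covers only the common `uname -m` values.

```shell
# Hypothetical helper: map this machine's CPU architecture to a Docker platform string.
host_docker_platform() {
  case "$(uname -m)" in
    x86_64|amd64)  echo "linux/amd64" ;;
    arm64|aarch64) echo "linux/arm64" ;;
    *)             echo "linux/$(uname -m)" ;;  # fallback; may not match Docker's naming
  esac
}

# Hypothetical helper: pull one platform variant and print its recorded OS/architecture.
# Usage: image_arch IMAGE PLATFORM   (e.g. image_arch mysql:8 linux/amd64)
image_arch() {
  docker pull --platform "$2" "$1" >/dev/null
  docker image inspect --format '{{.Os}}/{{.Architecture}}' "$1"
}
```

If `image_arch` reports a different architecture than `host_docker_platform`, that container will run under emulation.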

Tools to generate images

We can use Cloud Native Buildpacks to generate images automatically from source code, without writing a Dockerfile.
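With the `pack` CLI, a build can look like the sketch below. It assumes `pack` is installed and that the Paketo builder `paketobuildpacks/builder-jammy-base` suits your app; `build_with_buildpacks` and the image name you pass are hypothetical.

```shell
# Hypothetical helper. Usage: build_with_buildpacks IMAGE_NAME   (run from the app's source folder)
build_with_buildpacks() {
  pack build "$1" \
    --builder paketobuildpacks/builder-jammy-base \
    --path .   # the buildpacks detect the language and produce an OCI image
}
```

The result is a regular image in the local Docker Engine, so it can be run with docker run like any other.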

Kubernetes

Kubernetes is an open-source container orchestration platform originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF). It is used to automate the deployment, scaling, and management of containerized applications across multiple hosts.

Kubernetes has become an essential tool in the development and operation of modern applications. Some of the key reasons why Kubernetes is so important include:

- Scalability and High Availability: Kubernetes provides advanced features for automatic scaling and load balancing, allowing applications to be quickly scaled up or down as demand changes. This helps ensure the high availability of services.

- Simplified Container Management: Kubernetes abstracts the complexity of container management, such as host provisioning, resource allocation, and container health monitoring. This allows developers to focus more on application development rather than the underlying infrastructure.

- Portability and Consistency: Kubernetes provides a consistent platform to deploy applications in different environments, such as on-premises, public cloud, or private cloud. This helps ensure application portability and reduces maintenance costs.

- Resilience and Disaster Recovery: Kubernetes provides advanced self-healing features, such as automatic container restart and workload redistribution. This improves the resilience of applications and facilitates disaster recovery.

- Configuration and Security Management: Kubernetes provides robust configuration and security management features, including role-based access control (RBAC), encryption of Secrets at rest (when enabled) and TLS for data in transit, and separation of concerns between applications and infrastructure.
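Scaling, load balancing, and self-healing can be tried with a few kubectl commands, as sketched below. It assumes kubectl points at a working cluster; `k8s_scaling_demo`, `desired_replicas`, and the `web` name are hypothetical. The `desired_replicas` function is a simplified version of the HorizontalPodAutoscaler's documented rule, desired = ceil(current × currentMetric / targetMetric), leaving out the controller's tolerance and stabilization windows.

```shell
# Hypothetical demo; "web" is an example name. Needs kubectl configured for a cluster.
k8s_scaling_demo() {
  kubectl create deployment web --image=nginx --replicas=2
  kubectl expose deployment web --port=80     # a Service that load-balances across the pods
  kubectl scale deployment web --replicas=5   # manual scaling
  # Automatic scaling (HPA); CPU-based scaling only acts if metrics-server
  # is installed and the pods declare CPU requests.
  kubectl autoscale deployment web --cpu-percent=60 --min=2 --max=10
  kubectl delete pod -l app=web --wait=false  # self-healing: the Deployment recreates the pods
  kubectl get pods -l app=web
}

# Hypothetical helper: simplified HPA rule using integer ceiling division.
# Usage: desired_replicas CURRENT_REPLICAS CURRENT_METRIC TARGET_METRIC
desired_replicas() {
  echo $(( ($1 * $2 + $3 - 1) / $3 ))
}
```

For example, `desired_replicas 3 90 60` prints 5: three pods at 90% CPU against a 60% target need ceil(4.5) = 5 pods.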

Overall, Kubernetes has become an essential platform for the deployment and operation of modern applications, especially those based on containers. Its ability to simplify container management, provide scalability and high availability, and ensure application portability and resilience makes it a popular choice among development and operations teams.

Amazon EKS (Elastic Kubernetes Service)

Amazon EKS is a managed Kubernetes service provided by Amazon Web Services (AWS). It removes the need to install, operate, and maintain your own Kubernetes cluster, significantly simplifying the provisioning and operation of a Kubernetes cluster on AWS. Some of the key benefits of EKS include:

- Control Plane Management: AWS takes care of provisioning and operating the Kubernetes control plane, including cluster updates and scaling.

- Integration with other AWS Services: EKS integrates well with other AWS services, such as Elastic Load Balancing, Amazon VPC, and AWS Identity and Access Management (IAM).

- High Availability and Resilience: EKS provides high availability and resilience for your Kubernetes cluster, with redundancy across multiple availability zones.

- Security: EKS provides security features, such as data encryption at rest and in transit, and integration with AWS IAM for access control.
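Creating a small EKS cluster from the command line can look like the sketch below. It assumes the `eksctl` and `aws` CLIs are installed and configured with AWS credentials; `create_eks_cluster` and the example values are hypothetical.

```shell
# Hypothetical helper. Usage: create_eks_cluster NAME REGION   (e.g. create_eks_cluster demo us-east-1)
create_eks_cluster() {
  eksctl create cluster --name "$1" --region "$2" --nodes 2
  # eksctl already writes kubeconfig; this is how you would do it for an existing cluster.
  aws eks update-kubeconfig --name "$1" --region "$2"
  kubectl get nodes
}
```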

Google Kubernetes Engine (GKE)

Google Kubernetes Engine (GKE) is a managed Kubernetes service provided by the Google Cloud Platform (GCP). It also abstracts the complexity of setting up and operating a Kubernetes cluster, offering many of the same benefits as EKS, such as control plane provisioning and operation, integration with other cloud services, and high availability.
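The equivalent steps on GKE, assuming the `gcloud` CLI is installed and a project is selected; `create_gke_cluster` and the example zone are hypothetical.

```shell
# Hypothetical helper. Usage: create_gke_cluster NAME ZONE   (e.g. create_gke_cluster demo us-central1-a)
create_gke_cluster() {
  gcloud container clusters create "$1" --zone "$2" --num-nodes 2
  gcloud container clusters get-credentials "$1" --zone "$2"  # configure kubectl
  kubectl get nodes
}
```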

Azure Kubernetes Service (AKS)

Azure Kubernetes Service (AKS) is the managed Kubernetes service provided by Microsoft Azure. It also simplifies the configuration and operation of a Kubernetes cluster, with deep integration with other Azure services, such as Azure Active Directory (now Microsoft Entra ID) for access control.
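On AKS, the same steps with the Azure CLI, assuming `az` is installed and you are logged in; `create_aks_cluster` and the example values are hypothetical.

```shell
# Hypothetical helper. Usage: create_aks_cluster RESOURCE_GROUP NAME LOCATION
create_aks_cluster() {
  az group create --name "$1" --location "$3"
  az aks create --resource-group "$1" --name "$2" --node-count 2 --generate-ssh-keys
  az aks get-credentials --resource-group "$1" --name "$2"  # configure kubectl
  kubectl get nodes
}
```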

DigitalOcean Kubernetes

DigitalOcean Kubernetes is a managed Kubernetes offering from DigitalOcean, a popular cloud platform. It provides an easy way to provision and operate Kubernetes clusters, with integration with other DigitalOcean services.
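With DigitalOcean's `doctl` CLI, assuming it is installed and authenticated; `create_doks_cluster` and the example region are hypothetical.

```shell
# Hypothetical helper. Usage: create_doks_cluster NAME REGION   (e.g. create_doks_cluster demo nyc1)
create_doks_cluster() {
  doctl kubernetes cluster create "$1" --region "$2" --count 2
  doctl kubernetes cluster kubeconfig save "$1"  # configure kubectl
  kubectl get nodes
}
```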

In addition to these managed Kubernetes services in the cloud, there are also tools for deploying and operating Kubernetes clusters on your own infrastructure, such as Minikube (for local development) and kubeadm (for deploying Kubernetes clusters on Linux hosts).
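For local development, a Minikube cluster takes two commands. A sketch, assuming `minikube` and `kubectl` are installed; `local_cluster` is a hypothetical helper name.

```shell
# Hypothetical helper for a local, single-node cluster.
local_cluster() {
  minikube start     # creates the cluster and configures kubectl to use it
  kubectl get nodes  # should list one node, named "minikube" by default
}
```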

Overall, these cloud-based Kubernetes solutions significantly simplify the configuration and operation of a Kubernetes environment, allowing teams to focus more on deploying and operating their applications rather than managing the underlying infrastructure.

Conclusion

In summary, Docker revolutionized the development and deployment of applications by introducing a standardized and portable way of packaging environments. Features like Dockerfile, Docker Compose and Docker Hub accelerate workflows and promote reuse, collaboration and consistency.